COMP 3511: Lecture 9

Date: 2024-10-03 15:02:14

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Multi-Threads Basics


Thread

- many app / programs: multithreads

- e.g. web browser: some displaying images, some retrieving data from internet

- or: each tab in a browser

- to perform tasks efficiently


- most OS kernels: multithreads

- e.g. multiple kernel threads are created at Linux system boot time

- motivation: can take advantage of processing capabilities on multicore systems

- i.e. parallel programming: often in data mining, graphics, AI

- process creation: time consuming and resource intensive

- thread creation: light-weight

- as thread shares code, data, and others within the process


Multithreaded program examples

- embedded systems

- elevators, planes, medical systems, etc.

- single program, concurrent operations

- most modern OS kernels

- internally concurrent: to deal w/ concurrent requests by multiple users

- no internal protection needed in kernel

- DB servers

- access to shared data by many concurrent users

- background utility processing: must be done

- network servers

- concurrent requests from network

- file server / web server / airline reservation systems

- parallel programming

- split program & data into multiple threads for parallelism


Single & multithreaded processes

- thread: basic unit of CPU utilization

- independently scheduled & run - instance of execution

- represented by

- thread ID

- program counter

- register set

- stack

- shares code, data, and OS resources: e.g. open files & signals

- efficiency: depends on no. of cores allocated to the process

- benefits

- responsiveness

- resource sharing

- threads: share process resource by default

- easier than inter-process shared memory / message passing

- as: threads run within the same address space

- economy

- thread creation: cheaper than process creation

- context switch: faster intra-process (thread) than inter-process

- scalability

- multithreaded processes: can take advantage of multicore architecture (threads run in parallel on different cores)
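The resource-sharing point above can be illustrated with a minimal Pthreads sketch. It assumes a POSIX system; the names `shared_counter`, `bump_counter`, and `run_shared_counter_demo` are made up for this example, and error checks on the Pthreads calls are omitted for brevity. The global variable exists once per process and is seen by every thread, while each thread's local variable lives on its own private stack.

```c
#include <pthread.h>

#define NUM_THREADS 4

/* Global data: one copy, shared by every thread in the process. */
static int shared_counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* Thread body: `local` lives on this thread's private stack. */
static void *bump_counter(void *arg)
{
    int local = *(int *)arg;

    pthread_mutex_lock(&counter_lock);    /* shared data needs synchronization */
    shared_counter += local;
    pthread_mutex_unlock(&counter_lock);
    return NULL;
}

/* Starts NUM_THREADS threads, waits for all of them, returns the shared total. */
int run_shared_counter_demo(void)
{
    pthread_t tids[NUM_THREADS];
    int amounts[NUM_THREADS];

    shared_counter = 0;
    for (int i = 0; i < NUM_THREADS; i++) {
        amounts[i] = i + 1;               /* thread i adds i + 1 */
        pthread_create(&tids[i], NULL, bump_counter, &amounts[i]);
    }
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_join(tids[i], NULL);

    return shared_counter;                /* 1 + 2 + 3 + 4 */
}
```

Calling `run_shared_counter_demo()` from a `main` function and compiling with `cc -pthread` should be enough to try it. No shared-memory segment or message passing is set up: the threads simply read and write the same variable.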


Multicore & Parallelism


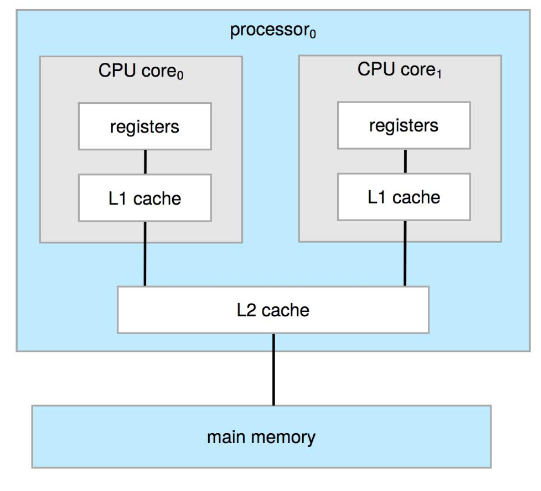

Multicore design

- multithreaded programming: provides mechanism for more eff. use of multi-cores

- and improves concurrency in multicore systems

- ⭐👨🏫 L2 cache / memory are shared by cores


Multicore programming

- significant new challenges in multicore / multiprocessor programming design

- dividing tasks: how to divide into separate / concurrent tasks

- balance: each task performing equal amount of work

- data splitting: data used by tasks: divided to run on separate cores

- 👨🎓 dividing seed among farmers to work concurrently

- data dependency: synchronization required if data dependency exist

- e.g. operation B: requiring completion of operation A

- testing and debugging: many different execution paths are possible, which makes debugging difficult

- parallelism != concurrency

- parallelism: system can perform more than one task simultaneously

- concurrency: supporting more than one task for making progress

- i.e. doing more than one job over a time

- when multiplexed over time: on a single processor core, the scheduler provides concurrency

- advent of multicore: requires new approach in software system design


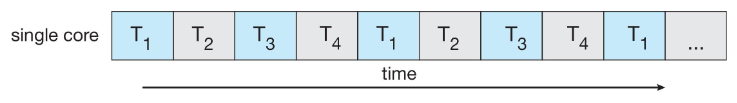

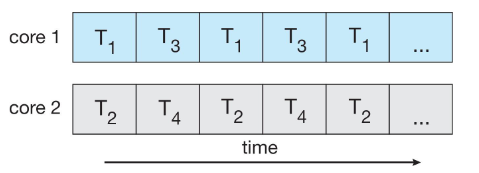

Concurrency vs. parallelism

- concurrent: single core multiplexing over time

- parallel: execution on a multi-core system

- 👨🏫 rigorously, parallelism only exists in multicore

- but much of the literature doesn't differentiate them


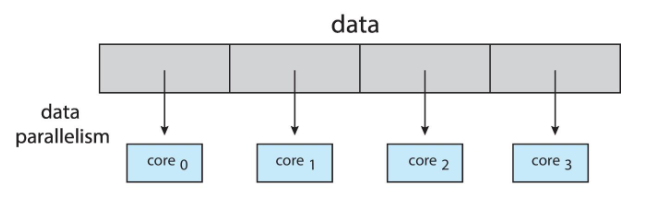

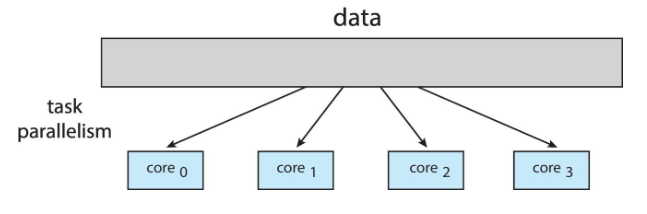

Data & task parallelism

- data parallelism: distribute subset of data across multiple cores

- same operation on each core

- common: in distributed machine learning tasks

- task parallelism: distribute threads across cores

- each thread: performing unique operation

- data & task: not mutually exclusive: could be used at the same time!
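As a rough sketch of data parallelism, the code below splits an array into equal slices and runs the same operation (a sum) on each slice in its own thread. It assumes POSIX threads; `NUM_WORKERS`, `struct slice`, `sum_slice`, and `parallel_sum` are illustrative names, and error handling is left out. Task parallelism would instead give each thread a different function to run.

```c
#include <pthread.h>
#include <stddef.h>

#define NUM_WORKERS 4

/* One slice of the input plus a slot for that worker's partial result. */
struct slice {
    const int *data;
    size_t begin, end;    /* half-open range [begin, end) */
    long partial_sum;
};

/* Same operation for every worker; only the data subset differs. */
static void *sum_slice(void *arg)
{
    struct slice *s = arg;

    s->partial_sum = 0;
    for (size_t i = s->begin; i < s->end; i++)
        s->partial_sum += s->data[i];
    return NULL;
}

/* Splits data[0..n) across NUM_WORKERS threads and combines the partial sums. */
long parallel_sum(const int *data, size_t n)
{
    pthread_t tids[NUM_WORKERS];
    struct slice slices[NUM_WORKERS];
    long total = 0;

    for (size_t w = 0; w < NUM_WORKERS; w++) {
        slices[w].data = data;
        slices[w].begin = n * w / NUM_WORKERS;
        slices[w].end = n * (w + 1) / NUM_WORKERS;
        pthread_create(&tids[w], NULL, sum_slice, &slices[w]);
    }
    for (size_t w = 0; w < NUM_WORKERS; w++) {
        pthread_join(tids[w], NULL);      /* wait before reading the result */
        total += slices[w].partial_sum;
    }
    return total;
}
```

Each worker writes only to its own `partial_sum`, so no lock is needed; the only synchronization point is the `pthread_join` before the partial results are combined.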


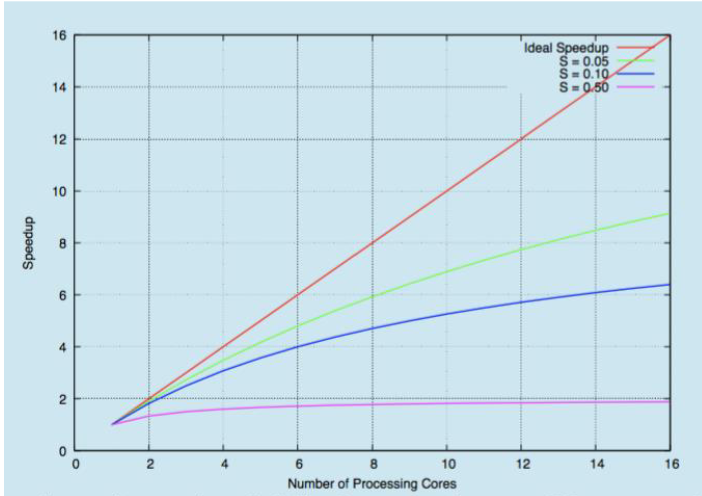

Amdahl's law

- identifies the performance gain from adding cores to an application

- w/ both serial and parallel components

- $S$: serial portion

- $P = 1 - S$: parallel portion

- $N$: processing cores

- speedup $\le \dfrac{1}{S + \frac{1 - S}{N}}$

- as $N$ approaches infinity: speedup approaches $1 / S$

- serial portion of application: disproportionate effect

- on performance gained by adding additional cores

- e.g. if application is 75% parallel, 25% serial

- then moving from 1 to 2 cores: gives speedup of $1 / (0.25 + 0.75 / 2) = 1.6$

- 👨🏫 even with a 5% serial portion: speedup is capped at $1 / 0.05 = 20$, however many cores are added
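Amdahl's bound is easy to evaluate numerically. The helper below is a small sketch (the function name `amdahl_speedup` is made up here) that takes the serial fraction and the core count and returns the upper bound on speedup, 1 / (serial + (1 - serial) / cores).

```c
/*
 * Upper bound on speedup predicted by Amdahl's law.
 * serial_fraction: share of the work that cannot be parallelized (0 < s <= 1)
 * cores:           number of processing cores (>= 1)
 */
double amdahl_speedup(double serial_fraction, int cores)
{
    double parallel_fraction = 1.0 - serial_fraction;

    return 1.0 / (serial_fraction + parallel_fraction / cores);
}
```

For the 75% parallel example, `amdahl_speedup(0.25, 2)` gives 1.6. With a 5% serial portion, 8 cores give roughly 5.9 and 64 cores roughly 15.4, which shows how quickly the serial part starts to dominate.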


Threads


Multithreaded processes

- multithreaded process: more than one instance of execution (i.e. threads)

- thread: similar to process, but shares the same address space

- state of each thread: similar to process

- with its own program counter and private set of registers for execution

- two threads running in a single process: switching between threads requires a context switch, too

- register state of a thread: saved, and another thread's is loaded / restored

- address space: remains the same - much smaller context switch

- and thus much smaller overhead

- thus parallelism is supported

- enables overlap of IO with other activities within a single program

- one thread: running on CPU; another thread doing IO


Thread

- thread: single unique execution context: lightweight process

- program counter, registers, execution flags, stack

- thread: executing on processor when its state resides in the processor's registers

- PC register: holds address of currently executing instruction

- registers: hold the root state of the thread (other state in memory)

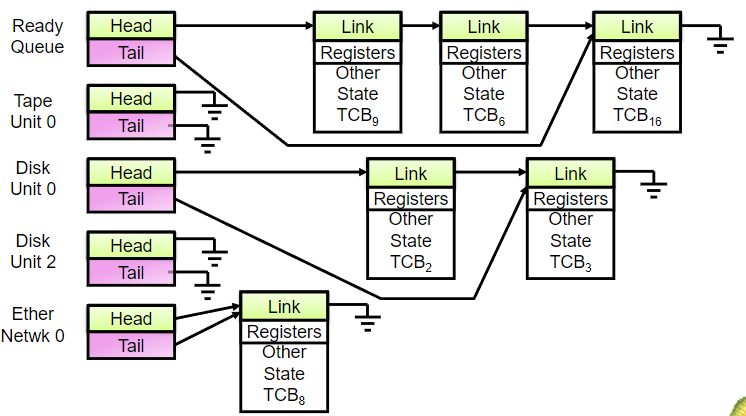

- each thread: w/ thread control block (TCB)

- execution state: CPU registers, program counter, pointer to stack

- scheduling info: state, priority, CPU time

- accounting info

- various pointers: for implementing scheduling queues

- pointer to enclosing process: PCB of belonging process
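The TCB fields listed above could be laid out roughly as in the following sketch. This is not how any particular kernel defines its TCB: every field name, the register count of 16, and the helpers `tcb_enqueue` / `tcb_dequeue` are assumptions chosen for illustration. The `next` pointer shows how a TCB can be linked into a scheduling queue.

```c
#include <stddef.h>
#include <stdint.h>

enum thread_state { T_NEW, T_READY, T_RUNNING, T_WAITING, T_TERMINATED };

struct pcb;    /* enclosing process; left opaque in this sketch */

struct tcb {
    int tid;

    /* execution state, saved while the thread is not running */
    uintptr_t pc;               /* program counter */
    uintptr_t sp;               /* pointer to the thread's stack */
    uintptr_t regs[16];         /* general-purpose registers (count is arbitrary) */

    /* scheduling and accounting info */
    enum thread_state state;
    int priority;
    uint64_t cpu_time_used;

    /* pointers */
    struct tcb *next;           /* next TCB in whichever queue holds this thread */
    struct pcb *process;        /* PCB of the process this thread belongs to */
};

/* FIFO queue of TCBs, e.g. a ready queue or a device queue. */
struct tcb_queue {
    struct tcb *head, *tail;
};

void tcb_enqueue(struct tcb_queue *q, struct tcb *t)
{
    t->next = NULL;
    if (q->tail)
        q->tail->next = t;
    else
        q->head = t;
    q->tail = t;
}

/* Removes and returns the oldest TCB, or NULL if the queue is empty. */
struct tcb *tcb_dequeue(struct tcb_queue *q)
{
    struct tcb *t = q->head;

    if (t) {
        q->head = t->next;
        if (!q->head)
            q->tail = NULL;
        t->next = NULL;
    }
    return t;
}
```

Note that the address space is not stored per thread: it is reached through the `process` pointer, which is why switching between threads of the same process only needs to save and restore the execution-state fields.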


Thread state

- thread: encapsulates concurrency - active component of a process

- address space: encapsulates protection - passive part of process

- one program's address space: different from another's

- i.e. keeping a buggy program from trashing the entire system

- state shared by all threads in a process / address space

- contents of memory (global variables, heap)

- IO state (file descriptors, network connections, etc.)

- state not shared / private to each thread

- kept in TCB: thread control block

- CPU registers (including PC)

- execution stack: parameters, temporary variables, saved PC (return addresses)


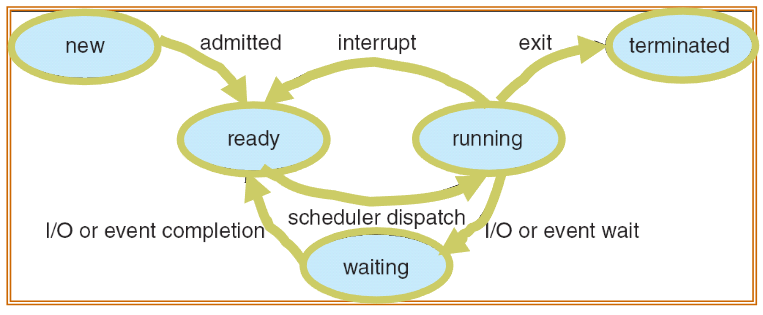

Lifecycle of a thread

- change of state

- new: thread being created

- ready: thread waiting to run

- running: instructions being executed

- waiting: thread waiting for an event to occur

- terminated: thread finished execution

- or: killed by OS / user

- active threads: represented by TCBs

- TCBs: organized into queues based on states
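The lifecycle can be written down as a small state machine. The sketch below follows the classic five-state diagram and is an assumption about which transitions are legal; in particular, it only models termination from the running state and does not cover a thread being killed while ready or waiting. The names `thread_state` and `can_transition` are made up for this example.

```c
#include <stdbool.h>

enum thread_state { NEW, READY, RUNNING, WAITING, TERMINATED };

/* Returns true if the five-state model allows moving from `from` to `to`. */
bool can_transition(enum thread_state from, enum thread_state to)
{
    switch (from) {
    case NEW:
        return to == READY;           /* admitted by the scheduler */
    case READY:
        return to == RUNNING;         /* dispatched onto a CPU */
    case RUNNING:
        return to == READY            /* preempted or yielded */
            || to == WAITING          /* blocked on IO or an event */
            || to == TERMINATED;      /* finished execution */
    case WAITING:
        return to == READY;           /* the awaited event occurred */
    default:
        return false;                 /* TERMINATED is final */
    }
}
```

A waiting thread never goes straight back to running: when its event occurs it is moved to the ready queue and has to be dispatched again.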


Ready queue and IO device queues

- thread not running: TCB is in some queue

- queue for each device / signal / condition: w/ their own scheduling policy

- more accurate visualization of ready queue (instead of PCB, w/ TCB)


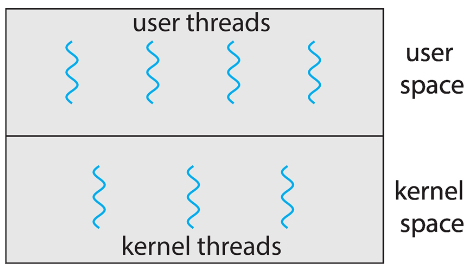

User threads and kernel threads

- user threads: independently executable entity within a program

- created & managed by user-level threads library

- user threads: visible only to the programmer, not to the kernel

- kernel threads: can be scheduled & executed on a CPU

- supported & managed by OS

- kernel threads: the only threads visible to the OS

- example: virtually all general-purpose OSes

- Windows, Linux, Mac OS X, iOS, Android


Multithreading models

- as user threads: invisible to kernel

- => cannot be scheduled to run on a CPU

- OS: only manages & schedules kernel threads

- thread libraries: provide API for creating & managing user threads

- primary libraries

- POSIX Pthreads - POSIX API

- Windows threads - Win32 API

- Java threads - Java API


- user-level thread: providing concurrent & modular entities

- in a program that OS can take advantage of

- 👨🎓 here! this can be done in parallel manner!

- if no user threads defined: kernel threads can't be scheduled either

- ❓ are kernel threads assigned at runtime?

- 👨🏫 yes; user threads are static though

- cycles of threads / control / etc.: applies to kernel threads


- example

pthread_t tid; /* thread identifier */

pthread_create(&tid, NULL, worker, NULL); /* creating thread */

- ultimately: a mapping must exist between user threads and kernel threads

- common ways:

- many-to-one: many user-level threads mapped to one kernel thread

- one-to-one: one user-level thread mapped to one kernel thread

- most common in modern OS

- many-to-many: many user-level threads mapped to many (usually a smaller no. of) kernel threads
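The `pthread_create` call from the example above can be expanded into a slightly fuller sketch that also passes an argument and collects the thread's result with `pthread_join`. The `worker` body (doubling an integer) and the wrapper `create_and_join` are invented for illustration; only `pthread_create` and `pthread_join` are real Pthreads calls. On Linux, where the one-to-one model is used, each such user thread is backed by its own kernel thread.

```c
#include <pthread.h>
#include <stddef.h>

/* Thread start routine: receives one void* argument, returns a void*. */
static void *worker(void *arg)
{
    int *value = arg;

    *value *= 2;          /* some work on data shared with the creator */
    return value;         /* handed back to whoever calls pthread_join */
}

/* Runs worker on `input` in a new thread; returns the result, or -1 on error. */
int create_and_join(int input)
{
    pthread_t tid;        /* thread identifier, filled in by pthread_create */
    void *result = NULL;

    if (pthread_create(&tid, NULL, worker, &input) != 0)
        return -1;
    if (pthread_join(tid, &result) != 0)   /* blocks until worker returns */
        return -1;

    return *(int *)result;
}
```

Passing the address of `input` is safe here only because `create_and_join` stays blocked in `pthread_join` until the worker is done; a creator that returned early would need heap-allocated or otherwise longer-lived data.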
