Introduction

information

These are my notes on COMP 3511: Operating Systems As I was enrolled to the course only after the add-drop, some of my early notes (lecture 1-4; lab 1) might be less organized.

Course information

- Course code: COMP 3511

- Course title: Operating Systems

- Semester: 24/25 Fallcon

- Credit: 3

- Grade: A-F

- TMI

- Prerequisites: COMP 2611 OR [(ELEC 2300 OR ELEC 2350) AND (COMP 2011 OR COMP 2012H)]

- Exclusion: N/A

Description

This is an introductory course on operating systems. The topics will include the basic concepts of operating systems, process and threads, inter-process communications, process synchronization, scheduling, memory allocation, page and segmentation, secondary storage, I/O systems, file systems, and protection. It contains the key concepts as well as examples drawn from a variety of real systems such as Microsoft Windows and Linux.

My section

- Section: L2 / LA2

- Time:

- Lecture: TuTh 03:00PM - 04:20PM

- Lab: Mo 03:00PM - 04:50PM

- MT: October 25, 06:30PM - 09:30PM

- Venue:

- Lecture: LT-G

- Lab: G010, CYT Bldg

- Instructor: Li, Bo

- email: [email protected]

- Teaching Assistants:

- Project

- Peter CHUNG ([email protected])

- PA 1-3 related questions

- Tao FAN ([email protected])

- PA 1 grading & appealing

- Tianyi BAI ([email protected])

- PA 2,3 grading & appealing

- Peter CHUNG ([email protected])

- Homework

- Jingcan CHEN ([email protected])

- HW 1 grading & appealing

- Feiyuan ZHANG ([email protected])

- HW 2 grading & appealing

- Xingxing TANG ([email protected])

- HW 3 grading & appealing

- Peng YE ([email protected])

- HW 4 grading & appealing

- Jingcan CHEN ([email protected])

- Lab

- Peter CHUNG ([email protected])

- Teaching PA-related labs (Lab 3,5,8)

- Zhonghan CHEN ([email protected])

- Teaching Lab 1,2

- Yijun SUN ([email protected])

- Teaching Lab 4,6

- Junze LI ([email protected])

- Teaching Lab 7,9

- Peter CHUNG ([email protected])

- Project

Grading scheme

| Assessment Task | Percentage |

|---|---|

| Mid-Term | 20% |

| HW | 20% |

| Projects | 30% |

| Final Exam | 30% |

Required texts

- Abraham Silberschatz, Peter B. Galvin, Greg Gagne John Wiley & Sons Ltd, April 2018. Operating System Concepts, 10th Edition, MIT Press.

Optional resources

- Remzi Arpaci-Dusseau & Andrea Arpaci-Dusseau. Operating Systems: Three Easy Pieces

COMP 3511: Lecture 1

Date: 2024-09-26 13:41:44

Reviewed:

Topic / Chapter: Basic OS

summary

❓Questions

Notes

Introduction to the Course

-

Introduction

- learning goals

- fundamental principles, strategies, and algorithms

- for design & implementation of operating systems

- analyze & evaluate OS functions

- understand basic structure of OS kernel

- and identify relationship between various subsystems

- identify typical events / alerts / symptoms

- indicating potential OS problems

- design & implement programs for basic OS functions and algorithms

- fundamental principles, strategies, and algorithms

- course overview

- overview (4)

- basic OS concept (2)

- system architecture (2)

- process and thread (12)

- process and thread (4)

- CPU scheduling (4)

- synchronization and synchronization example (2)

- deadlock (2)

- memory and storage (8)

- memory management (2)

- virtual memory (3)

- secondary storage (1)

- file systems and implementation (2)

- protection (1)

- protection (1)

- security - optional

- overview (4)

- learning goals

Operating System

-

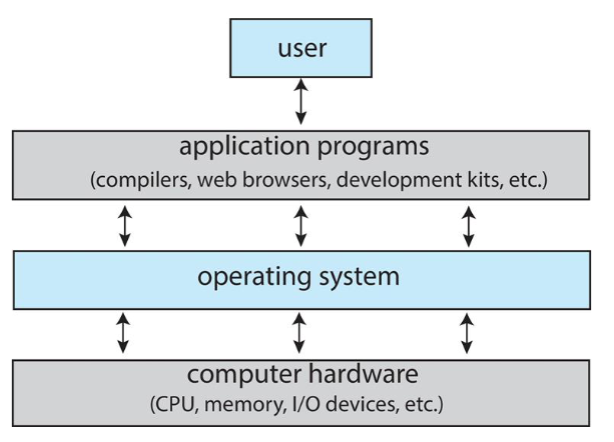

Operating system components

- users: people, machine, other computers / devices

- application programs: defining ways how system resources are used

- to solve user problems

- e.g. editors, compilers, web browsers, video games, etc.

- operating system: controls & coordinates use of computing resources

- among various application & different users

- hardware: basic computing resources: CPU, memory, IO devices

-

Operating system introduction

- OS: a (complex) program working as intermediary between: user-applications and hardware

- iOS, MS Window, Android, Linux, etc.

- OS goals:

- execute user programs & solve user problems easier

- make computer system: convenient to use

- manage & use computer hardware efficiently

- user view:

- convenience, easy of use, good performance & utility

- user: doesn't care about resource utilization & efficiency

- system view:

- OS: resource allocator & control program

- no universally accepted definition, but:

- "everything vendors ships when you order an OS"

- OS as a resource allocator

- managing both SW & HW resources

- decides among conflicting requests: for efficient & fair resource use

- OS as a control program

- controls: execution of programs, prevent errors & improper use of computer

- OS: manages & controls hardware

- helps to facilitate (user) programs to run

- OS: a (complex) program working as intermediary between: user-applications and hardware

-

OS breakdown

- kernel: one program always running on computer

- provides essential functionalities

- middleware: set of SW frameworks: provide additional services to app dev

- e.g. DB, multimedia, graphics

- popular in mobile OS

- all else:

- system programs

- application programs

- OS includes:

- always running kernel

- middleware frameworks: for easy app development & additional feature

- system programs: aid in managing system while running

- kernel: one program always running on computer

-

Operating system tasks

- depends on: PoV (user vs. system) and target devices

- shared computers (mainframe, minicomputer)

- OS: need to keep all users satisfied

- performance vs. fairness

- individual systems (e.g. workstations) with dedicate resources

- performance > fairness; may use shared resource from servers

- mobile devices: resource constrained

- target specific user interfaces (touch screen, voice detection)

- optimized for usability & battery life

- computers / computing devices w/ little-no UI

- embedded systems: present within home devices, automobiles, etc.

- run real-time OS

- design: run primarily without user intervention

- embedded systems: present within home devices, automobiles, etc.

Computer System Organization

-

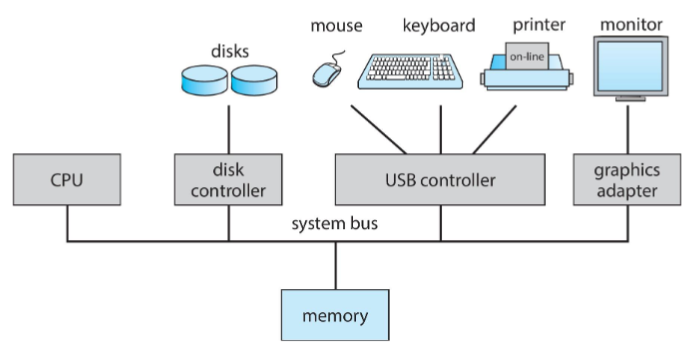

Computer system organization

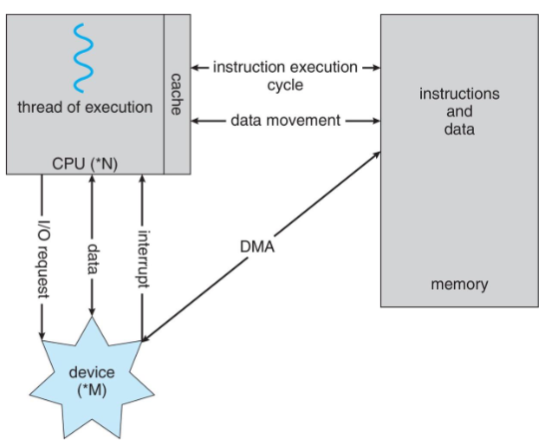

- one or more CPU cores & device controllers: connected through common bus

- providing access to shared memory

- goal: concurrent execution of CPUs and devices

- compete for memory cycles w/ shared bus

- compete for memory cycles w/ shared bus

- one or more CPU cores & device controllers: connected through common bus

-

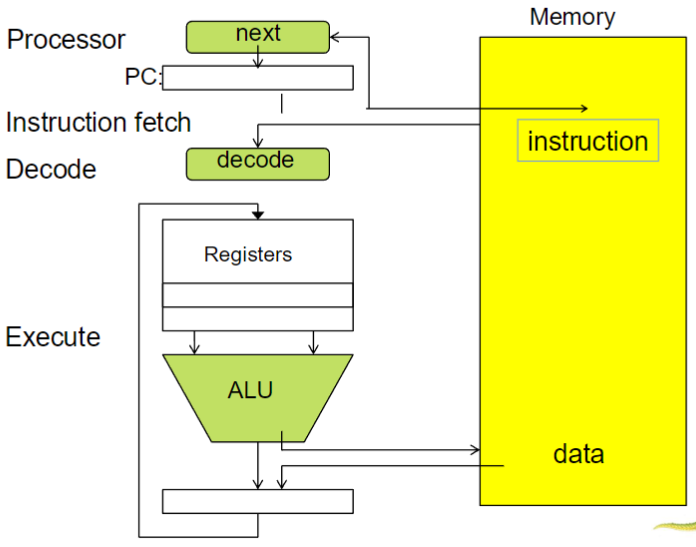

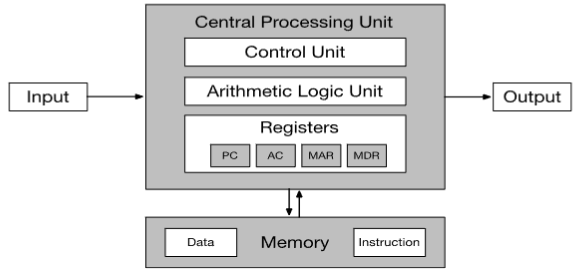

Von Neumann architecture: composition

- CPU: contains ALU & processor registers

- programmer counter (PC)

- accumulator (AC)

- memory address register (MAR)

- memory data register (MDR)

- control unit: contains

- instruction register (IR)

- program counter (PC)

- memory: w/ data and instructions

- as well as caches

- external mass storage / secondary storage for more space

- input-output mechanism

- CPU: contains ALU & processor registers

-

Von Neumann architecture

- steps

- fetch instruction

- decode instruction

- fetch data

- execute instruction

- write back (if any)

diagrams

- steps

COMP 3511: Lecture 2

Date: 2024-09-29 21:40:37

Reviewed:

Topic / Chapter: Interrupts & Storage

summary

❓Questions

Notes

Interrupts

-

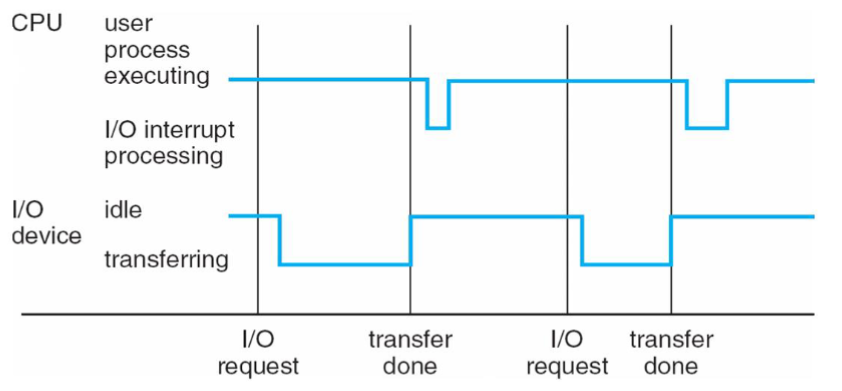

IO

- IO device & CPU: execute concurrently & asynchronously

- ~= event driven?

- each device controller: in charge of a particular device

- w/ local buffer

- responsible for moving data between:

- peripheral devices it control

- & local buffer storage

- IO operation: from device -> local buffer of controller

- CPU: moves data from/to main memory local buffers

- typically: for slow devices (keyboard, mouse)

- DMA controller: to move data for fast devices (e.g. disks)

- device controller: informs CPU operation termination (completion / fail) by: interrupt

- i.e. requires CPU attention

- for input: i.e. data is available in local buffer

- for output: i.e. IO operation has been completed

- IO device & CPU: execute concurrently & asynchronously

-

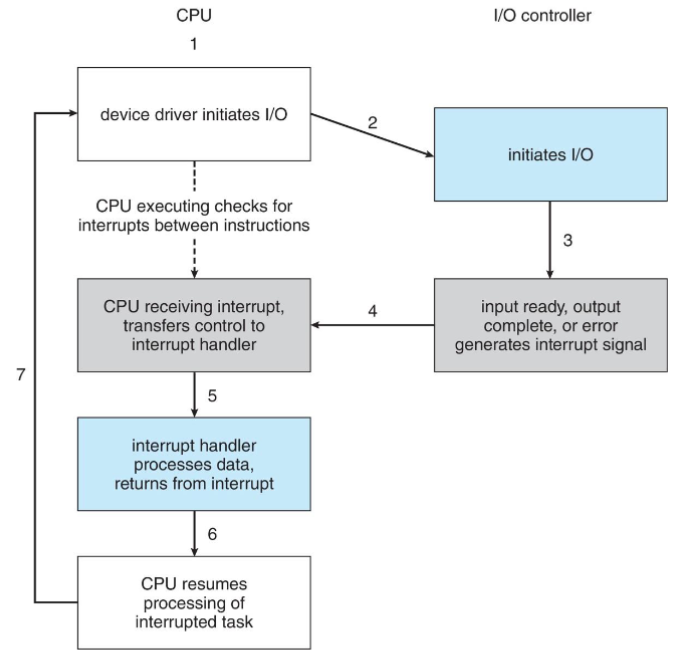

Interrupt cycle

-

Interrupt timeline

- CPU & device: execute concurrently

- IO device: may trigger interrupt by sending CPU a signal

- CPU handles interrupt (i.e. transfer control to interrupt handler)

- then returns to interrupted instruction

-

Interrupt functions

- interrupts: widely used in modern OS systems

- for asynchronous event handling

- e.g. device controllers hardware faults

- all modern OS: interrupt-driven

- hundreds of interrupts occur per sec

- CPU: extremely fast ()

- hundreds of interrupts occur per sec

- interrupt: transfers control to:

- interrupt service routine / interrupt handler

- part of kernel code; OS runs to handle a specific interrupt

- also: implements a interrupt priority levels

- high-priority interrupt: can preempt execution of low-priority

- trap / exception: SW-generated interrupt caused either by:

- error (e.g. div by 0) / user request (e.g. some syscall)

- interrupts: widely used in modern OS systems

Storage

-

Bits & Units

- bit: basic unit of computer storage (0/1)

- byte: 8 bits

- smallest chunk of storage from most computers

- word: computer architecture's native unit of data

- nowadays: mostly

1 word = 8 byte = 64 bits

- nowadays: mostly

- larger units

- kilobyte bytes

- megabyte bytes

- gigabyte bytes

- terabyte bytes

- petabyte bytes

- often rounded up in for computer manufacturers

- however, network measurements: given in bits, not bytes

-

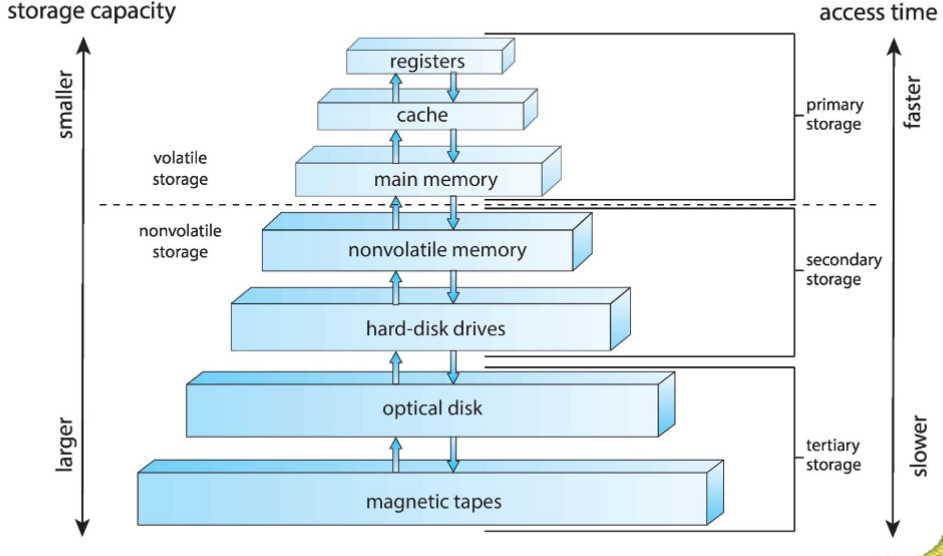

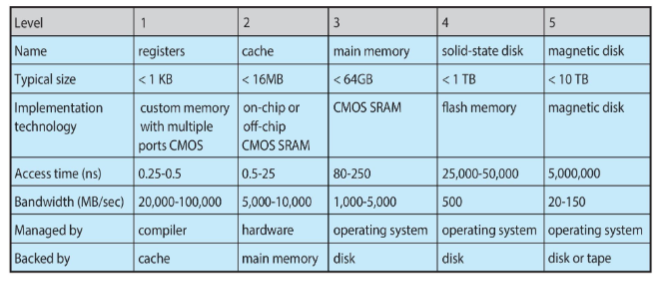

Storage hierarchy

- storage systems: organized in hierarchy

- w/ speed, cost / unit, capacity, and volatility (non-volatile vs. volatile)

- usually, higher: faster & more expensive, but smaller & more volatile

- storage systems: organized in hierarchy

-

Memory

- main memory: directly accessible by CPU

- only large storage media to be so

- volatile, often random-access memory

- in the form of: dynamic ram (DRAM)

- basic operations:

load&storeto specific memory address- that is: byte addressable

- each addr: refers to 1 byte in memory

- computers: also use other forms of memory

- main memory: directly accessible by CPU

-

Second storage

- i.e. extension of main memory

- w/ non-volatile storage capacity

- data: stored permanently

- most common such storage:

- hard disk drives (HDD)

- nonvolatile memory (NVM) devices

- provides storage for both programs & data

- generally: two types

- mechanical

- HDDs, optical disks, holographic storage, magnetic tape

- generally larger & less expensive

- electrical

- flash memory, SDD, FRAM, NRAM

- electrical: often referred as NVM

- typically costly, smaller, more reliable & faster

- mechanical

-

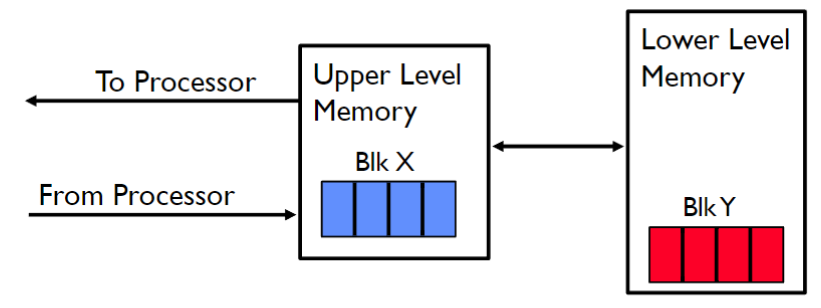

Caching

- important: performed at many levels in computers

- for memory, address translation, file blocks, file names (freq. used), file dir, network routes, etc.

- ⭐ fundamental idea: a subset of information: copied from slower to a faster storage temporarily

- i.e. make freq. used case: faster

- access: first check whether information is inside cache

hit: inside cache; information can be directly retrieved (fast)miss: not inside cache; data copied from slower storage to cache, then used (slow)

- cache: usually much smaller than storage (memory) being cached

- cache management: cache size & replacement policy

- major criteria: cache hit ratio; percentage of hit per access

- important measurement

- important: performed at many levels in computers

-

Locality: principle below caching

- temporal locality (in time)

- recently accessed items: likely to be accessed again

- spatial locality (in space)

- contiguous blocks (i.e. neighbor of recently accessed): likely to be accessed shortly

- without locality pattern (i.e. all item: accessed w/ equal prob): cache can't work

- temporal locality (in time)

COMP 3511: Lecture 3

Date: 2024-10-02 02:10:47

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Storage / IO

-

Cache levels

-

range of timescales

name time (ns) L1 cache reference 0.5 branch mispredict 5 L2 cache reference 7 mutex lock/unlock 25 main memory reference 100 compress 1K bytes w/ Zippy 3,000 send 2K bytes w/ 1 Gbps net 20,000 read 1 MB sequentially from memory 250,000 round trip within same data center 500,000 disk seek 10,000,000 read 1 MB sequentially from disk 20,000,000 send packet CA->Netherlands->CA 150,000,000

-

-

IO subsystem

- OS: accommodate wide variety of devices

- w/ different capabilities, control-bit def., protocol to interact

- OS: enables standard, uniform way of interaction w/ IO

- involving abstraction, encapsulation, and software layering

- encapsulate device behavior in generic classes

- each: accessed through interface: standardized set of functions

- one purpose of OS: hide gap between hardware devices from users

- abstraction & encapsulation!

- IO subsystem: responsible for

- memory management of IO

- buffering: storing data temporarily while being transferred

- caching: storing parts of data in faster storage for performance

- spooling: overlapping / queuing of one job's output as input of other jobs

- typically: printers

- general device-driver interface

- driver for specific hardware devices

- memory management of IO

- OS: accommodate wide variety of devices

-

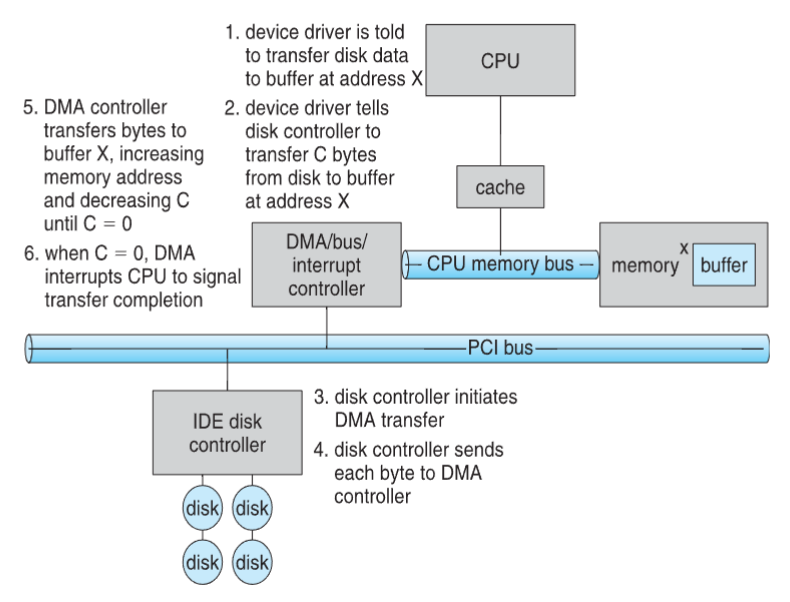

Direct memory access

- programmed IO: CPU running special IO instructions

- moving byte one-by-one

- between memory & slow devices (keyboard & mouse)

- for fast devices: programmed IO must be avoided

- instead, use direct memory access (DMA) controller

- idea: let IO device and memory transfer data directly

- bypass CPU!

- CPU / OS initialize DMA controller, and it's now responsible for moving data

- benefit: CPU freed from slow IO operations

- OS writes DMA command block into memory

- preparation

- source & destination addresses

- read || write mode

- no. of bytes to be transferred

- execution

- write location of command block to DMA controller

- bus mastering of DMA controller

- i.e. grab bus from CPA

- send interrupt to CPU for signaling completion

- preparation

DMA transfer diagram

- programmed IO: CPU running special IO instructions

Processors

-

Single processor system

- traditionally: most system w/ single processor, single CPU, single processing core

- core: executes instructions & registers for storing data locally

- processing core / CPU: capable of executing a general-purpose instruction set

- such systems: w/ other special-purpose processors

- e.g. device-specific processors (for disk) and graphic controllers (GPU)

- those: w/ limited instruction set

- not executing instructions from user processes

- traditionally: most system w/ single processor, single CPU, single processing core

-

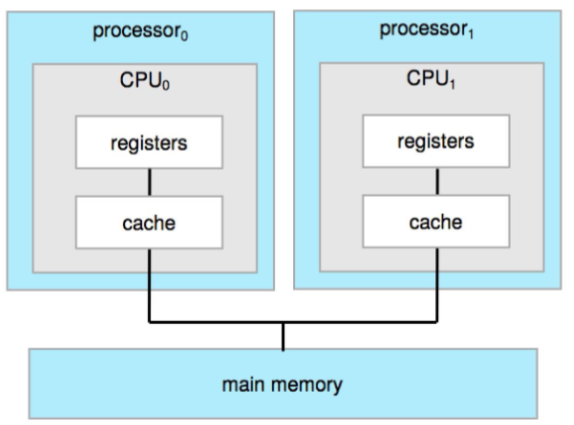

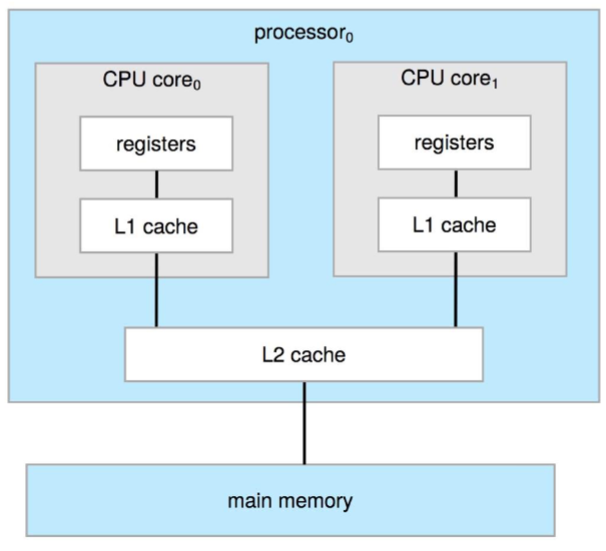

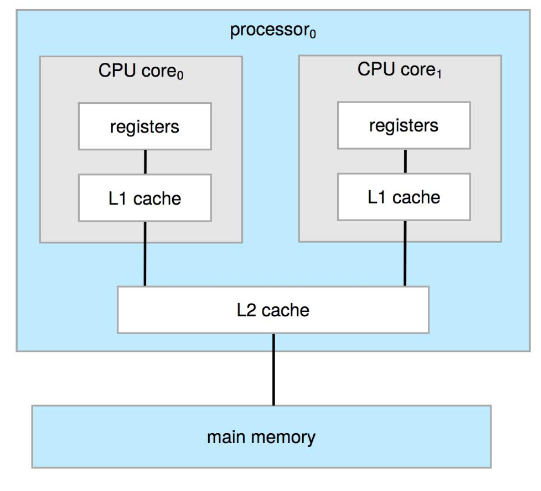

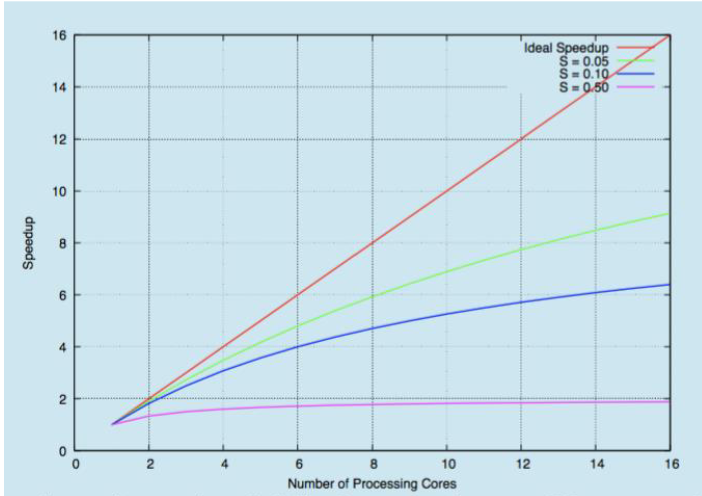

Multiprocessor system

- modern computer (mobile to servers): dominated by multiprocessors system

- traditionally: 2+ processors and each w/ single-core CPU

- speedup ratio: less than no. of processors

- due to overhead - contention for shared resources

- e.g. bus / memory

- multiprocessor advantage

- increased throughput: more computing capability

- economy of scale: share other devices (e.g. IO devices)

- increased reliability: graceful degradation / fault tolerance

- two types of multiprocessor systems

- asymmetric multiprocessing

- master-slave manner

- master processor: assign specific tasks to slaves

- master: handles IO

- symmetric multiprocessing

- each processor: performs all tasks

- including OS functions & user processes

- aka SMP

- each processor: w/ own set of registers and private || local cache

- but all processors: share physical memory via system bus

- each processor: performs all tasks

- asymmetric multiprocessing

- multicore: multiple computing cores inside a single physical chip

- faster intra-chip communication than inter-chip

- using significantly less power: important for mobile / laptops

- modern computer (mobile to servers): dominated by multiprocessors system

-

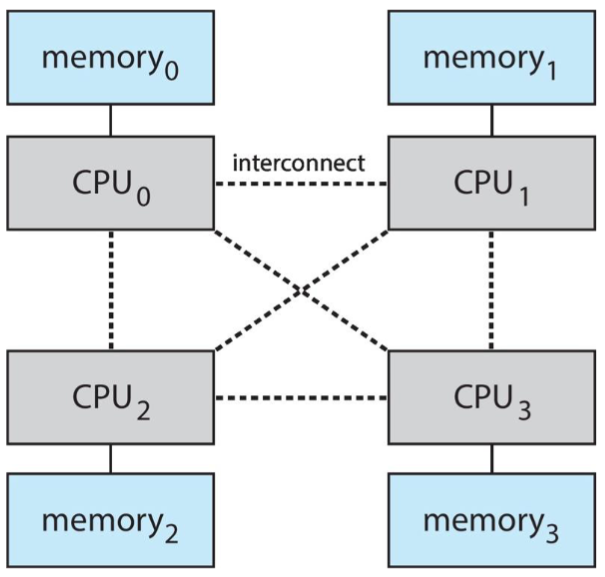

Non-Uniform Memory Access (NUMA)

- adding more CPU to multiprocessor: may not scale / help

- as system bus can be a bottleneck

- alternative: provide each CPU local memory

- accessed via small & fast local bus

- CPUs: connected by shared system interconnect

- all CPU: share one physical memory address space

- such approach: non-uniform memory access (NUMA)

- potential drawback

- increased latency when CPU must access remote memory across system interconnect

- e.g. memory of another CPU?

- scheduling & memory management implication

- increased latency when CPU must access remote memory across system interconnect

-

Computer system component

- CPU: hardware executing instructions

- processor: physical chip containing one or more CPUs

- core: basic computation unit of CPU

- or: component executing instructions / registers for storing data locally

- multicore: including multiple computing cores on a single physical processor chip

- multiprocessor system: including multiple processors

OS Structure

-

OS structure

- 2 common characteristics exist in all modern OS

- multiprogramming: for efficiency

- aka batch system

- single program: can't keep CPU & I/O busy

- thus multi-program: organize multiple jobs

- s.t. CPU will not stay idle

- in mainframe PC: jobs being submitted remotely & queued

- selected & ran via jab scheduling: load into the memory

- timesharing: logical extension of multiprogramming

- aka multitasking

- CPU: switches frequently between jobs

- s.t. users: can interact w/ each job while running

- enables: interactive computing

- characteristics

- response time:

- each user: w/ at least one program executing in memory (= process)

- if several jobs ready to run: CPU scheduling needed

- if: processes don't fit in memory

- swapping technique required

- moving some program in and out of memory during execution

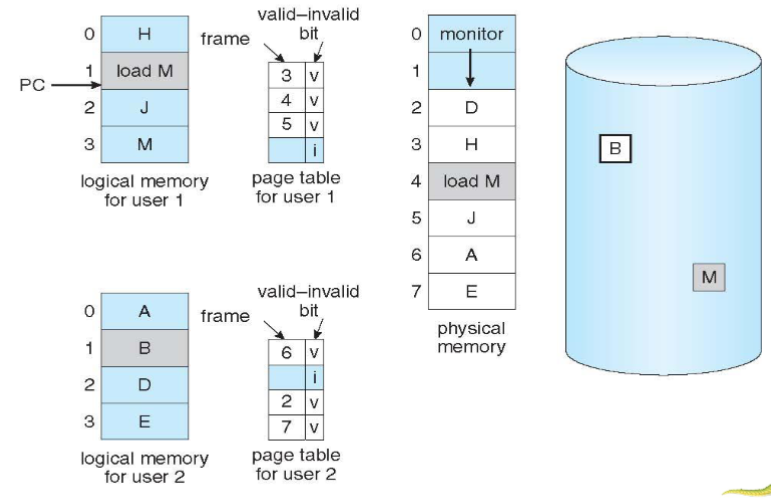

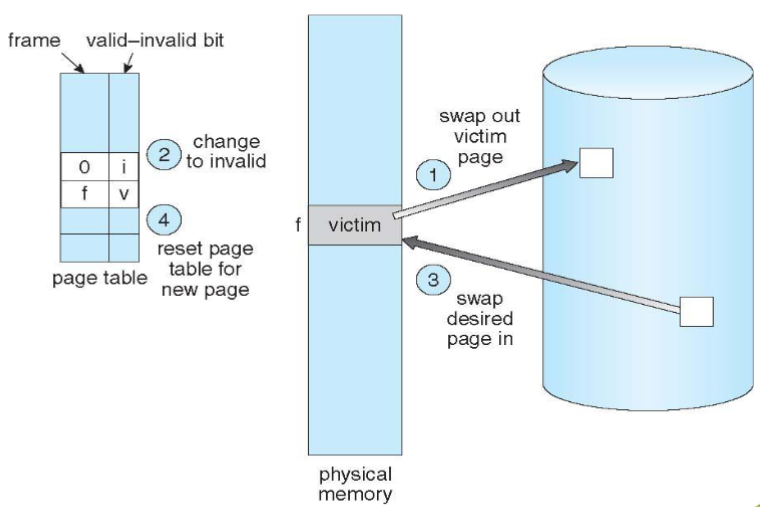

- virtual memory: allows execution of processes that are not completely in memory

Virtualization

-

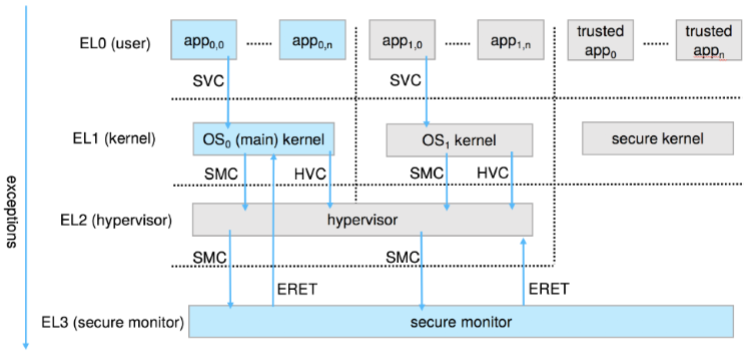

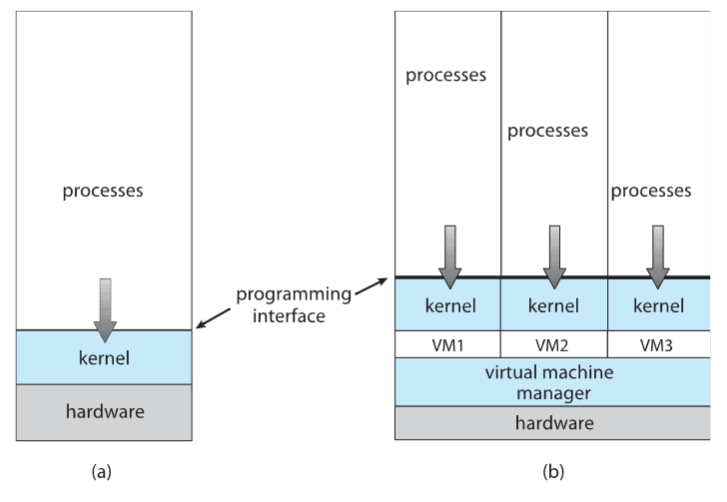

Virtualization

- virtualization: abstracts hardware of a single computer

- into multiple different execution environments

- creating illusion: each user / program is running on their "private computer"

- creates: virtual system = virtual machine (VM) to run OS / applications over

- allows: an OS to run as an app within another OS

- 👨🏫 growing industry!

- allows: an OS to run as an app within another OS

- into multiple different execution environments

- components

- host: underlying hardware system

- virtual machine manager (VMM): creates & runs VM by providing interface

- s.t. it's identical to the host

- aka hypervisor

- guest: process provided w/ virtual copy of host

- i.e. program. often: an OS (guest OS)

- allows: a single physical machine to run multiple OS concurrently

- each on their own VM

system models

- virtualization: abstracts hardware of a single computer

-

Virtualization history

- virtualization: OS natively compiled for CPU, running guest OSes

- originally: designed in IBM mainframes (1972)

- for: allowing multiple users to run tasks concurrently

- under: system designed for a single use

- or: sharing batch-oriented system

- for: allowing multiple users to run tasks concurrently

- VMware: runs one or more guest copies of Windows on Intel x86 CPU

- virtual machine manager (VMM): provides environment for programs

- that is: essentially identical to original machine (interface)

- programs on such environment: only experience minor performance decrease (due to extra layers)

- VMM: in complete control of system resources

- originally: designed in IBM mainframes (1972)

- by late 1990s: Intel CPUs on general purpose PCs: fast enough for virtualization

- technology: treated by , which are still relevant today

- virtualization: expended to many OSes, CPUs, VMMs

- virtualization: OS natively compiled for CPU, running guest OSes

COMP 3511: Lecture 4

Date:

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Cloud Computing

-

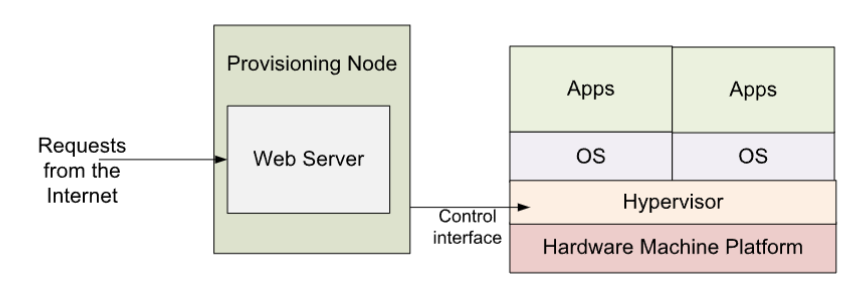

Cloud computing & virtualization

- delivers: computing, storage, or apps as a service over a network

- logical extension of virtualization: combination of virtualization & communication

- Amazon EC2: w/ millions of servers, and tens of millions of VMs

- petabytes of storage available across the Internet

- payment: based on usage

- Amazon EC2: w/ millions of servers, and tens of millions of VMs

-

Cloud types

- public cloud: available vis Internet to anyone willing to pay

- private: run by company for internal use

- hybrid cloud: includes both public & private components

- software as a service (SaaS): provides one / more apps via Internet (web based application)

- no installation required

- platform as a service (PaaS): provides SW stack ready for application via Internet

- e.g. database server, Google Apps Engine, Heroku

- infrastructure as a service (IaaS): provides servers / storage over Internet

- e.g. VM instances, cloud bucket

- other services: also appearing

- e.g. machine learning as a service (MaaS)

Free & Open Source

-

Free and open source OS

- OS: often made available in source code, rather than just binary

- yet: MS Windows: closed source

- idea: started by Free Software Foundation (FSF) w/ "copyleft" GNU Public License (GPL)

- free software != open-source software

- free software: licensed to allow no-cost use, redistribution, modification

- in addition to public source

- open source: not necessarily w/ such license

- popular examples:

- GNU / Linux

- FreeBSD UNIX (and core of MAx OS X - Darwin)

- Solaris

- open source code: arguable more secure

- more programmers to contribute & check

- a better learning tool for us

- OS: often made available in source code, rather than just binary

OS Services

-

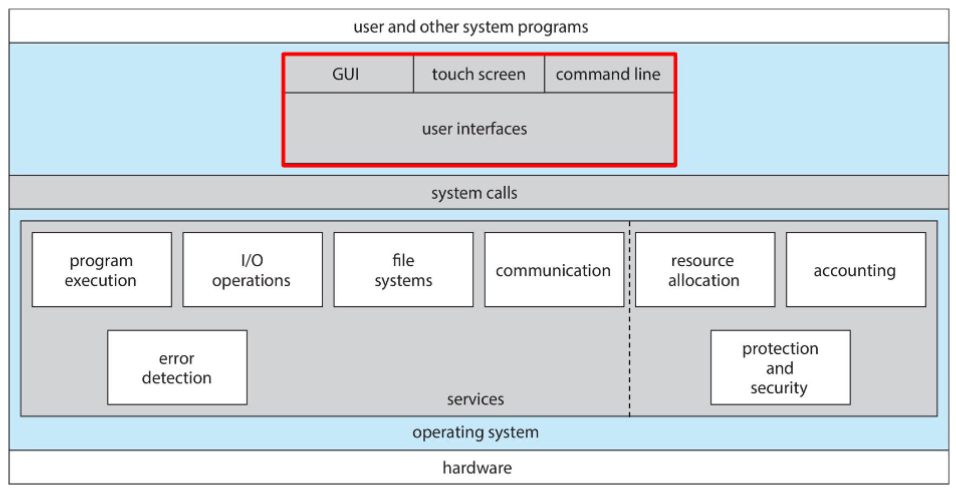

OS services

- OS: provides environment for execution of programs

- and other services to programs & users

- set of OS services (OS functions) helpful to user

- user interface: almost all OS has a user interface (UI)

- command line (CLU), graphics (GUI), touch-screen

- program execution: system: must be able to load program into memory

- and run the program & end execution

- either normally or abnormally (= error)

- and run the program & end execution

- IO operations: running program: may require IO

- might involve: files / IO device

- ⭐ file-system manipulation: programs: need to read & write files and directories

- in additions to: search, create, and delete

- and further: listing file information / permission management

- communications: processes: may exchange information (on same computer, or over a network)

- via: shared memory or through message passing (packets: moved by the OS)

- error detections: OS needs to be constantly aware of possible errors

- may occur in CPU, memory, IO devices, user programs

- for each error type: OS must take appropriate actions

- to ensure: correct & consistent computing

- debugging facilities: can greatly enhance the user's and programmer's abilities

- to efficiently use the system

- user interface: almost all OS has a user interface (UI)

- other set of OS services: ensures efficient operation of system (via resource sharing)

- resource allocation: within multiple concurrent users & jobs

- resources: must be allocate to each of them

- e.g. CPU cycles, memory, file storage, IO devices

- resources: must be allocate to each of them

- logging: keep track of: which users use how much

- and what kinds of computer resources

- protection, security: owners of information stored in multi-user / networked system: might want control on information use

- e.g. permission control

- concurrent processes: should not interfere with each other

- protection: involving ensuring that all accesses to system resources: controlled

- security of the system from outsiders: requires user authentication

- extends to: defending external IO devices from invalid access attempts

- resource allocation: within multiple concurrent users & jobs

- OS: provides environment for execution of programs

-

User OS interface: CLI

- generally: three approaches for UI exists

- CLI / command interpreter - directly enters commands to OS

- GUI / touch screen: allows user to interface w/ the OS

- CLI: allows direct command entry

- sometimes: implemented in kernel

- sometimes by system programs

- sometimes: multiple flavors implemented: shells in Unix / Linux

- primary functionality of shell:

- fetching command from user

- interpreting it

- executing it

- UNIX and Linux systems: provide different version of shells

- e.g. C shell, Bourne-Again, Korn, et.

- sometimes: implemented in kernel

- generally: three approaches for UI exists

-

User OS interface: GUI

- user-friendly desktop metaphor interface

- usually: mouse, keyboard, and monitor

- icons representing files, programs, directories, system functions, etc.

- various mouse buttons over objects: cause various actions

- e.g. provide information, execute function, open directory, etc.

- invented at: Xerox PARC earlier 1970s (near Stanford)

- first wide use: in Apple Macintosh (1984)

- many systems now: provide both CLI and GUI interfaces

- MS Windows: using GUI w/ CLI command shell

- Apple Max OS X: Aqua GUI interface

- w/ UNIX kernel underneath, as well as shells

- Unix and Linux: w/ CLI and optional GUI interfaces (KDE, GNOME)

- user-friendly desktop metaphor interface

-

User OS interface: touchscreen

- touch screen requires: new interfaces

- mouse: not feasible / desired

- actions & selection: based on gestures (no mouse move)

- virtual (on-screen) keyboard for text

- voice command: iPhone's Siri, etc.

- touch screen requires: new interfaces

Syscall

-

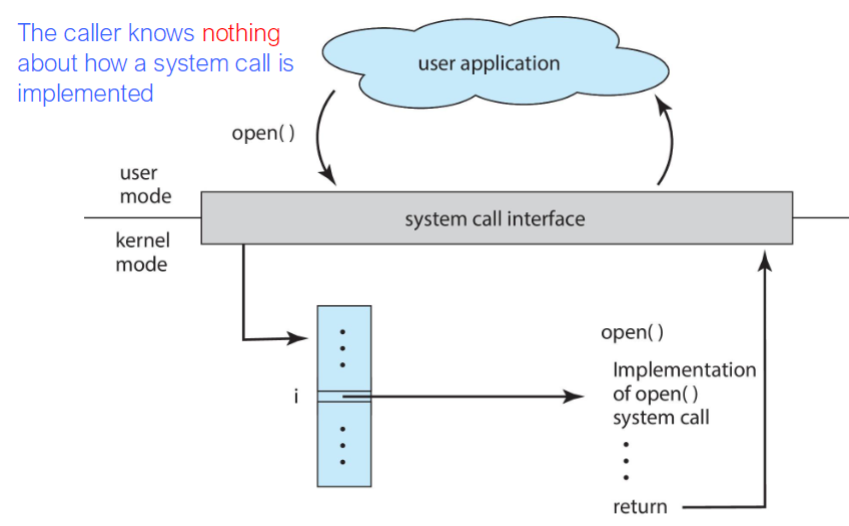

Syscall

- system call: programming interface to the services provided by the OS

- syscall: generally available as functions, written in a high-level language (C/C++)

- certain low-level tasks: written in assembly languages (accessing hardware)

- e.g.:

cp in.txt out.txtinvolves multiple syscall- input file name, accept input, open input file, create out file, etc.

-

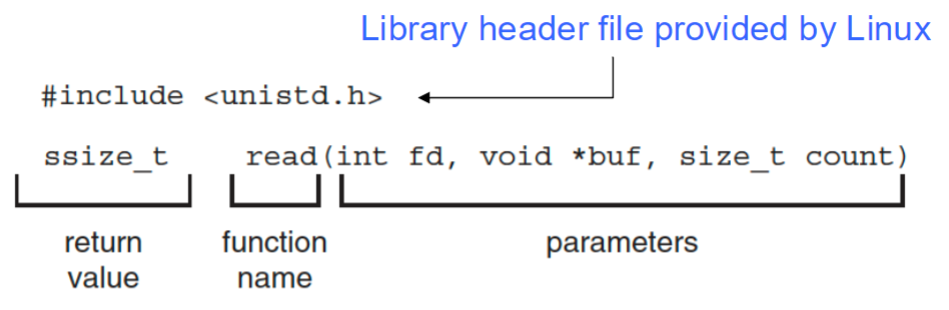

API

- application program interface (API): specifying a set of functions, available for app programmers to use

- including: parameters passed to functions

- and expected return values

- including: parameters passed to functions

- function making up API: typically invoke actual syscalls, on behalf of programmer

- programmer: access APIs via library provided by the OS

- in UNIX / Linux:

libcfor C programs

- in UNIX / Linux:

- three most common APIs:

- Win32 API for Windows systems

- POSIX API for POSIX-based systems (UNIX, Linux, and Mac OS X)

- Java API for JVM

- standard API example

- parameters

int fd: file descriptor to be readvoid *buf: buffer the data will be read intosize_t count: maximum number of bytes to be read: into the buffer

- return value

0: indicating end of file-1: indicating error

- advantages: why use API over syscall?

- program portability: programmer w/ API: expect program to run on any system supporting same API

- syscall implementation: might vary from machine to machine

- abstraction: hiding complex details of the syscall from users

- actual syscall: often more details / difficult to work with

- caller of API: doesn't need to know implementation of syscall

- caller: simply obey API format / understand what does API do

- program portability: programmer w/ API: expect program to run on any system supporting same API

- application program interface (API): specifying a set of functions, available for app programmers to use

-

Syscall implementation

- for most programming languages: run-time environment (RTE) provides syscall interface

- serving as link to syscalls, made available by OS

- RTE: set of functions built into libraries

- each syscall: associated w/ identity number

- syscall interface: maintains a table indexed according to these numbers

- syscall interface: functions

- intercept: function calls in API

- invoke: necessary syscall within OS

- return: status of syscall / return values if any

- API-syscall-OS relationship

- for most programming languages: run-time environment (RTE) provides syscall interface

-

Syscall parameter passing

- often: more info than just syscall identity is needed

- exact type / amount of information: vary for OS / call

- 👨🏫 although we will spend some time on a syscall without parameter (

fork())

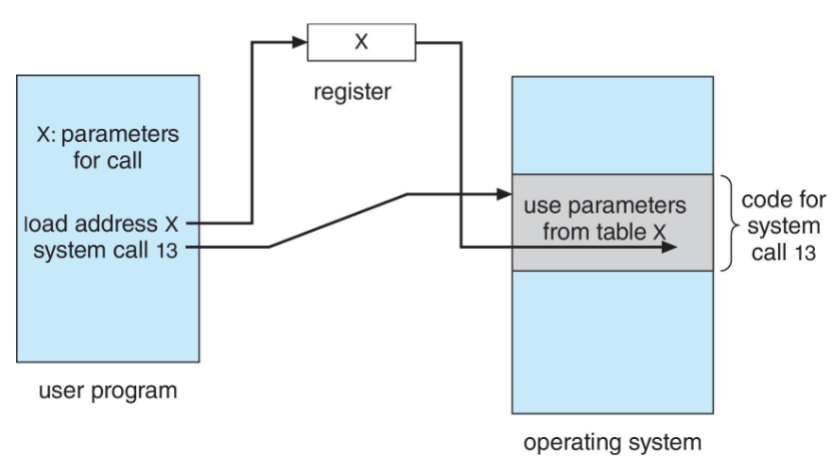

- three general methods used to pass parameters from user programs to OS

- simplest: pass parameters in registers

- block method: parameters stored in block / table / memory

- and address of block: passed as a parameter in a register

- stack method: parameters placed / pushed onto stack by the program

- popped off the stack by the OS

- block & stack: no limitation on number / length of parameters!

- parameter passing: via table

- often: more info than just syscall identity is needed

-

Types of syscalls

- process control

- create / terminate process

- end, abort

- load, execute

- get / set process attributes

- wait for time

- wait even, signal event

- allocate / free memory

- dump memory if error

- debugger for determining bugs, single step execution

- locks for managing access to shared data between processes

- file management

- create / delete file

- open / close file

- read, write, reposition

- get & set file attributes

- device management

- request / release device

- read, write, reposition

- get / set device attributes

- logically attach / detach devices

- information maintenance

- get & set time / data

- get & set system data

- get & set process, file, device attributes

- communications

- create, delete communication connection

- send, receive messages: to host name / process name

- if message parsing model

- shared memory model: create & gain access to memory regions

- transfer status information

- attach & detach remote devices

- protection

- control access to resources / get and set permissions

- allow / deny user access

- process control

-

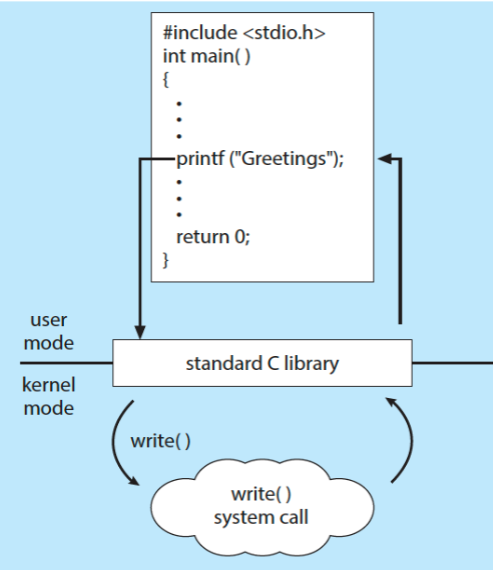

Examples

task Windows Unix process control CreateProcess()fork()ExitProcess()exit()WaitForSingleObject()wait()file management CreateFile()open()ReadFile()read()WriteFile()write()CloseHandle()close()device management SetConsoleMode()ioctl()ReadConsole()read()WriteConsole()write()information maintenance GetCurrentProcessID()getpid()SetTimer()alarm()Sleep()sleep()communications CreatePipe()pipe()CreateFileMapping()shm_open()MapViewOfFile()mmap()protection SetFileSecurity()chmod()InitializeSecurityDescriptor()umask()SetSecurityDescriptorGroup()chown()- standard C library example

- program invoking

printf()library call- leads to

write()syscall, etc.

- leads to

- program invoking

- standard C library example

System Programs

-

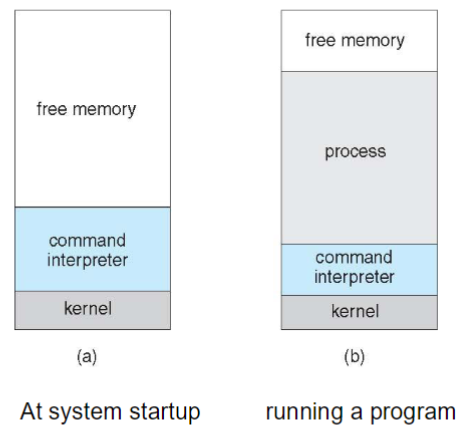

MS-DOS example

- characteristics

- single tasking

shellinvoked when system booted- simple to run a program

- no process created

- single memory space

- loads program into memory

- overwriting all: but the kernel

- program exit =>

shellreloaded

- characteristics

-

System programs

- another aspect of modern computer system: collection of system services

- system services: provide convenient environment for program development / execution

- aka system programs / utilities

- some: simply user interface to syscalls

- others: considerably more complex

- view of OS seen by most users: defined by application & system programs

- not the actual syscalls

- e.g. GUI featuring mouse-and-windows interface vs. UNIX shell

- same set of system calls

- yet, syscalls appear very differently & act in different way (from user)

-

List of system programs

- file management

- create, delete, copy, rename, print, dump, list

- and generally manipulate files & directories

- status information

- some: ask system for data, time, amount of available memory, disk space, number of users, etc.

- others: provide detailed performance, logging, debugging information

- file modification

- text editors to create & modify files

- special commands to search file contents / perform transformation of the text

- program-language support

- compilers, assemblers, debuggers, and interpreters

- for common programming languages (C, C++, Java, Python)

- often provided

- compilers, assemblers, debuggers, and interpreters

- program loading & execution

- absolute loaders, relocatable loaders, linkage editors, overlay-loaders, debugging systems

- for higher-level and machine language

- absolute loaders, relocatable loaders, linkage editors, overlay-loaders, debugging systems

- communications: providing mechanism for creating virtual connections

- among processes, users, and computer systems

- allowing users to:

- send messages to one another's screens

- browse web pages

- send electronic-mail messages

- log in remotely

- transfer files from one machine to another

- background services

- launch at boot time

- some: terminate after completing task

- some: continue to run until system terminates

- aka: services, subsystems, daemons

- provide: facilities like disk checking, process scheduling, error logging

- launch at boot time

- application programs

- not part of the OS

- launched by command line, mouse click, finger poke, etc.

- examples: web browsers, word processors, text formatters, spreadsheet, DB systems, games ...

- file management

-

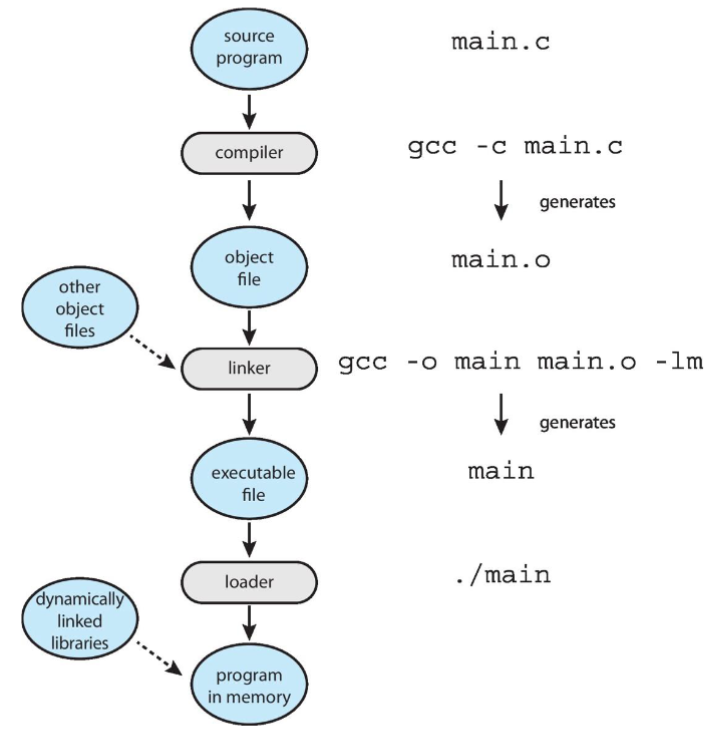

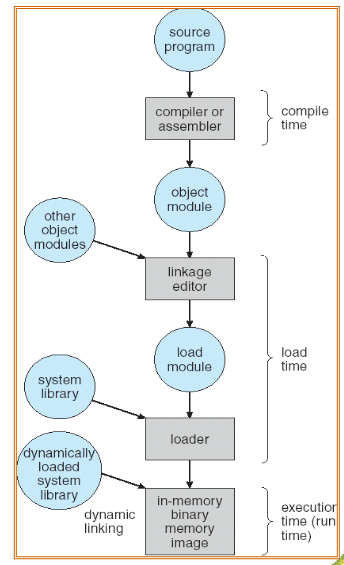

Linkers and loaders

- source codes: compiled into object files

- designed to be loaded into any physical memory location

- relocatable object files

- linker: combines object files into a single binary executable file

- programs: reside on secondary storage as binary executable

- must be brought into memory by loader to be executed

- relocation: assigns final addresses to program parts

- adjusts code & data in program to match those addresses

- modern general purpose OS: don't link libraries into executables statically

- instead: using dynamically linked libraries (aka DLLs in Windows)

- loaded when needed, and shared by all programs using the same version of that library

- loaded only once!

- loaded when needed, and shared by all programs using the same version of that library

- object files / executable files: often w/ standard formats

- machine codes, symbolic tables

- thus: OS knows how to load & start them

- instead: using dynamically linked libraries (aka DLLs in Windows)

- role of the linker and loader

- source codes: compiled into object files

Operating System Design and Implementation

-

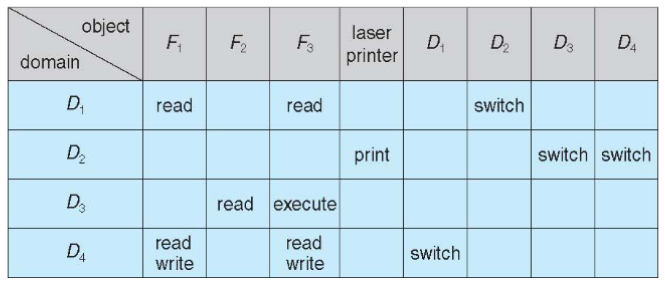

Protection

- protection: internal problem

- security: considering both the system & surrounding environment of is

- OS: must protect itself from user programs

Reliability: compromising the OS: generally causes it to crashSecurity: limit the scope of what processes can doPrivacy: limit each process to the data it has access permissionFairness: each user / process: should be limited to its "appropriate share" of system resources

- system protection features: guided by

- principle of need-to-know

- implement mechanisms to enforce: principle of least privilege

- minimum possible permission to do the job!

- computer systems contain object that must be protected from misuse

- hardware: memory, CPU time, IO devices

- software: files, programs, semaphores

- protection: internal problem

COMP 3511: Lecture 5

Date: 2024-09-17 14:56:54

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Operating System Design and Implementation

-

Design and implementation of OS

- not solvable / no optimal solution

- characteristics

- internal structure: varies widely

- even at high level: design affected by choice of hardware / system type, etc.

- desktop / laptop / mobile / distributed / real- tie

- design: starts with specifying goals & specifications

- user goals: OS being convenient to use

- and ease to learn & use

- reliable, safe, fast

- system goals: easy to design, implement, and maintain

- and flexible, reliable (hopefully) error-free & efficient

- user goals: OS being convenient to use

- highly creative task of SWE

-

Important principle ⭐

- separation of policy & mechanism

- policy: what will be done

- mechanism: how to do it?

- e.g. time construct, mechanism for ensuring CPU protection

- deciding how long the timer will be: policy decision

- e.g. time construct, mechanism for ensuring CPU protection

- 👨🏫 policy: often changes

- separation of policy from mechanism: allows flexibility even policies change

- w/ proper separation: mechanism can be used to policy decision for

- prioritizing IO-intensive program

- as well as vice versa

- w/ proper separation: mechanism can be used to policy decision for

- OS: designed w/ specific goals in mind

- which: determine OS policies

- OS: implements policies through specific mechanisms

- separation of policy & mechanism

-

Implementation

- early OSes: written in assembly (~= 50 yrs a go)

- modern OS: written in higher level language

- for some special device/ function: assembly might still be used

- but general system: written in C/C++

- frameworks: in Java, mostly

- w/ higher level language: it can be easily ported

- also, code can be write faster, compact, and easier to maintain

- speed / increased storage requirement: not a concern now

- technology advances!

Operating System Structure

-

OS structure

- general purpose OS: very large program

- various ways to structure one

- monolithic structure

- layered - specific type of modular approach

- microkernel - mach OS

- loadable kernel modules (LKMs) - modular approach

-

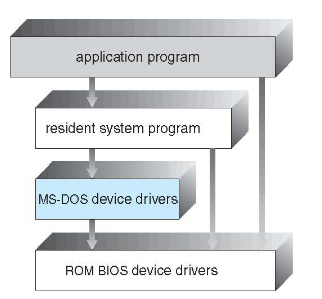

Simple structure

- the simplest possible

- structure not well-defined; started as a small / simple / limited system

- MS-DOS: written for maximum functionalist in minimum space

- not carefully divided into modules

- doesn't separate between user & os problem

- 👨🏫 NOT a dual system

- can cause many trouble nowadays (all user: can do sudo and all)

-

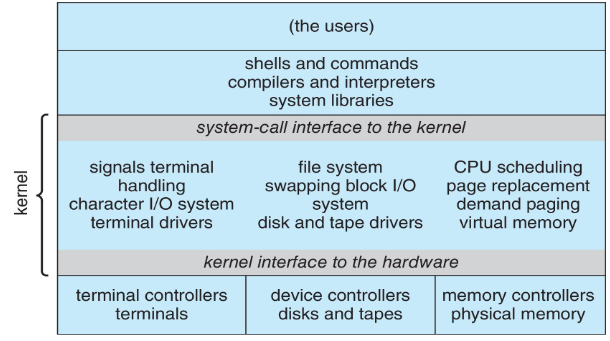

Monolithic structure: original linux

- kernel: consists of everything below the syscall interface

- and above the physical hardware

- employees multiprogramming

- 👨🏫 quite simple considering having to allocate stacks, etc.

- user: can't directly hardware

- kernel is underneath

- UNIX: initially limited by hardware functionality => limited structuring

- 👨🏫 still, it is a single program!

- a single, static binary file running in a single address space

- monolithic structure!

- 👨🏫 still, it is a single program!

- UNIX: made of two separable parts

- kernel: further separated into interfaces & device drivers

- expanded as UNIX evolves

- system programs

- kernel: further separated into interfaces & device drivers

- drawback: enormous functionality: combined to one level

- difficult to implement, debug & maintain

- advantage: distinct performance!

- no need to pass parameter, etc.

- very little overhead in syscall interface and communication, etc.

- kernel: consists of everything below the syscall interface

-

Linux system structure

- i.e. modular design

- allows kernel to be modified during run time

- based on UNIT

- still, monolithic:runs entirely in kernel mode w/ single address space

- i.e. modular design

-

Modular design

- monolithic approach: too compact and tight interaction

- why not make the tie looser?

- so that change in one module / functionality does not affect the others parts!

- 👨🏫 ideally, and hopefully!

- one method: layered approach

- e.g. file system: located above hardware

-

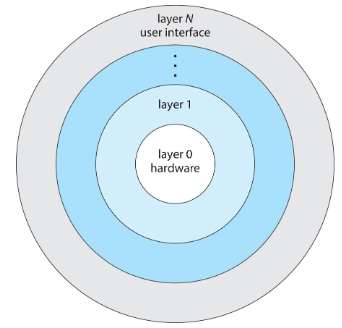

Layered approach

- OS: divided into no. of layers

- each built on top of lower layers

- layer : hardware

- layer : user interface

- each layer: made of data structure & set of functions

- that can be invoked by higher level layers

- each layer: utilized the services from a lower layer, provides a set of functions

- and offers certain services for a higher layer

- 👨🎓 linux: all in 1 layer

- information higher: layer not needing to know lower-layer's implementation

- each layers: hides existence of its own data structures / operations to higher levels

- main advantage: simplification of construction & debugging

- layered system: successfully used in other areas too

- like TCP/IP vs. hardware (networking system)

- few systems tue pure layered approach though

- overall performance will be affected

- trend: fewer layers w/ more functionality & modularization

- while avoiding complex layer definition & interactions

- OS: divided into no. of layers

-

Mixed kernel system structure

- kernel: became large & difficult to manage

- some people: wanted to make OS a core

- and store all other nonessential parts outside

- mainstream (OS): expansion

- result: much smaller kernel

- Mach: developed at CMU in mid-1980s

- modularized the kernel w/ microkernel approach

- best-known microkernel OS: Darwin, used in Mac OS X, iOS

- consisting of 2 kernels, one being Mach microkernel

- UNIX / IBM, etc. thought it's desirable

- 👨🏫 but no general consensus on what's nonessential

- but generally: minimal process, memory management and communication facility: must be in kernel

- some of the essential functions

- provide communications between programs / services in user address space

- through message passing

- problem: if app wants to access file

- it must interact w/ file server through kernel, not directly to file / file manager

- provide communications between programs / services in user address space

- advantage

- easier to extend microkernel system (without modifying the kernel)

- minimal change to kernel

- easier to post OS to ner architectures

- more secure

- drawbacks: performance

- increased system overhead

- copying & storing messages during communication => makes it slow!

- not done by the mainstream

- Window NT: w/ layered microkernel

- much worse performance thantWindow 95 / Window XP

-

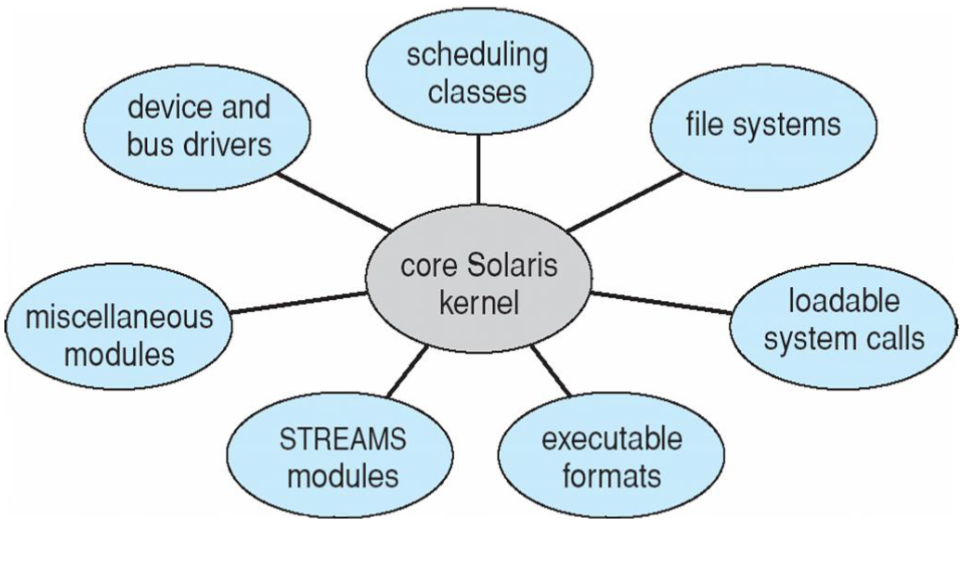

Modular approach: current methodology

- best current practice: involving loadable kernel modules (LKMs)

- i.e. programs can be added to kernel without rebooting

- once added, become a part of kernel, not user program!

- kernel: supports link to additional services

- services: can be implements & added dynamically while kernel is running

- dynamic linking: preferred to adding new features directly to kernel

- as it doesn't require recompiling kernel per every change

- resembles layer : as it has well-defined & protected interfaced

- yet, it's much more flexible

- also resembles microkernel: as primary module is only core functions and linkers

- more efficient that pure microkernel though

- linux: w/ LKMs, primarily for supporting device drivers & file systems

- LKMs: can be inserted into kernel during booting & runtime

- also can be removed during runtime!

- dynamic & modular kernel, while maintaining performance of monolithic system

- best current practice: involving loadable kernel modules (LKMs)

-

Case study: Solaris

- 👨🏫 in my days... Solaris was a dominant workstation provider

- PC wasn't a things... and we CS people used Linux

- now, the company is not computer system program though

- it worked with modular system

- 👨🏫 in my days... Solaris was a dominant workstation provider

-

Hybrid system

- most OS: combines benefit of different structures

- Linux: monolithic (in single address space), but also modular

- new functionality can be dynamically added

- windows: largely monolithic, with some microkernel behavior

- also provide support for dynamically loadable kernel modules

-

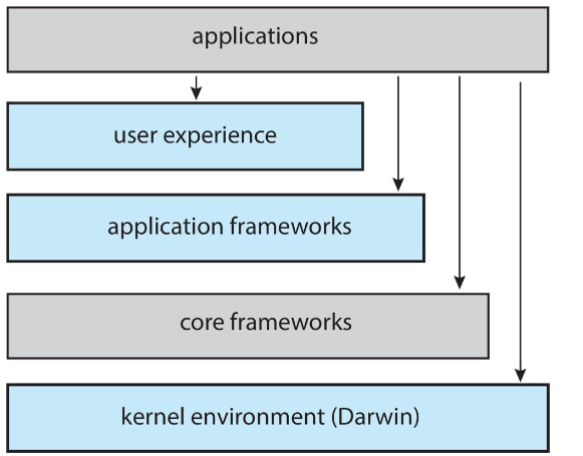

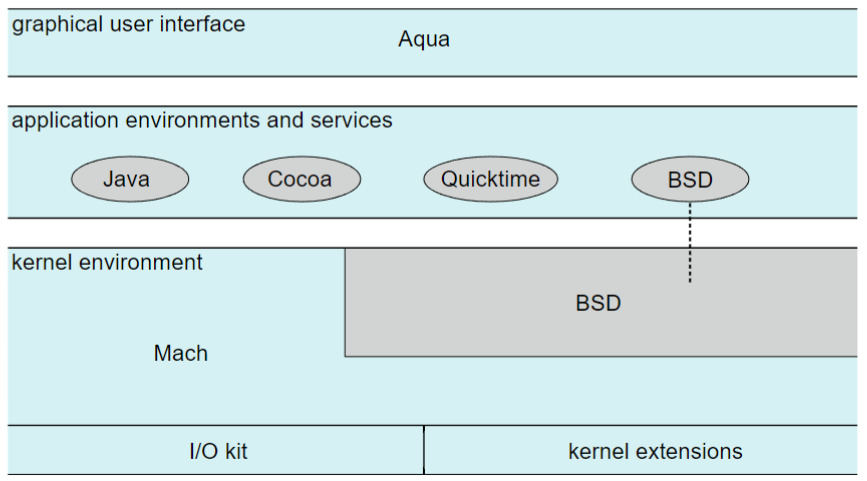

macOS & iOS structure

- in architecture: mcOS & iOS: have much in common

- UI, programming support, graphics, media, and even kernel environment

- Darwin: includes Mach microkernel and BSD UNIX kernel

- with dual base programs

- higher level structure

- Max OS X: hybrid, layered, Aqua UI (mouse / trackpad)

- w/ Cocoa programming environment: API for Objective-C

- core frameworks: support graphics and media

- including Quicktime & OpenGL

- kernel environment (Darwin) includes Mach microkernel & BSD

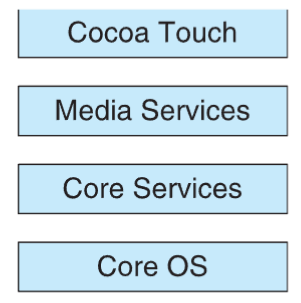

- iOS

- structured on Mac OS; added C functionality

- does not run applications natively; runs on different CPU architectures too (ARM & Intel)

- core OS: based on Mac OS X kernel, but with optimized battery

- Springboard UI: designed for touch devices

- Cocoa Touch: Object-C API for mobile app development (touch screen)

- Media services: layer for graphics, audio, video, quicktime, OpenGL

- Core services: providing cloud computing & data bases

- Core OS: based on Max OS X kernel

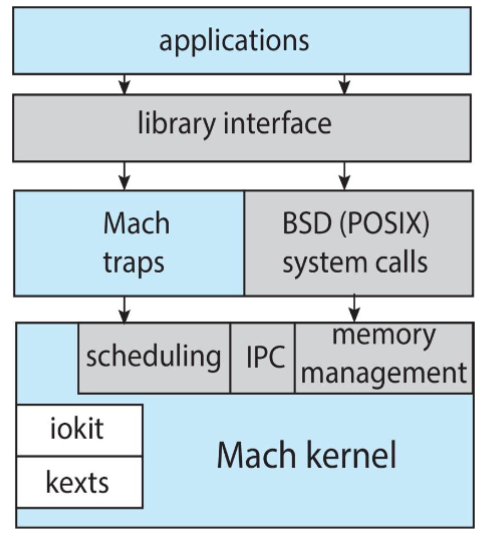

- Darwin

- layered system consisting of Mach microkernel & BSD UNIX kernel

- hybrid system

- two syscall interfaces: Mach syscalls (aka traps) and BSD syscalls (POSIX functionality)

- interface: rich set of libraries - standard C, networking, security, programming languages..

- Mach: providing fundamental OS services

- memory management, CPU scheduling, IPC facilities

- kernel: provide IO kit for device drivers

- dynamically loadable modules: aka kernel extensions (kexts)

- BSD: THE first implementation supporting TCP/IP, etc.

- layered system consisting of Mach microkernel & BSD UNIX kernel

- in architecture: mcOS & iOS: have much in common

-

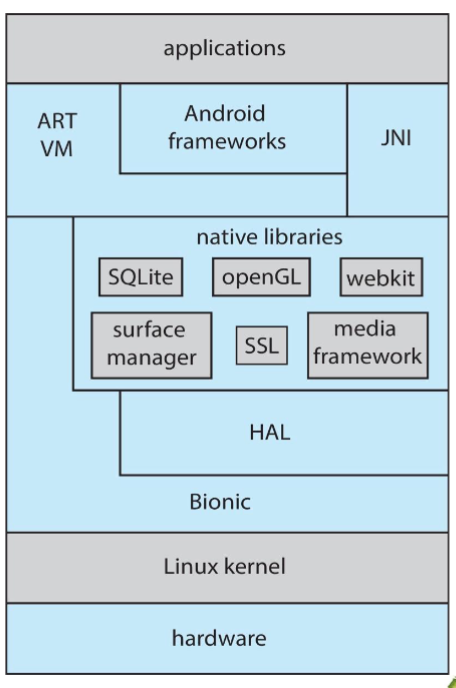

Android

- developed by Open Handset Alliance (led by GOogle)

- runs on a variety of mobile platforms

- open-source, unlike Apple's closed-sources

- similar to OS: layered system providing rich set of frameworks

- for graphics, audio, and hardware

- using a separate Android API for Java development (not standard Java)

- Java app: execute on Android Run Time (ART)

- VM: optimized for mobile devices w/ limited memory & CPU

- Java native interface (JNI): allows developers to bypass CM

- and access: specific hardware features

- programs written in JNI: generally not portable in terms of hardware

- libraries: include framework for:

Webkit: web browser devSQLite: DB supportSSLs: network support (secure sockets)

- hardware abstract layer (HAL): abstracts all hardware

- providing applications w/ consistent view

- independent of hardware

- providing applications w/ consistent view

- uses Bionic standard C library by google, instead of GNU C library for Linux systems

Process: Introduction

-

Introduction to processes

- 👨🏫 a real thing! no more high level!

- objectives:

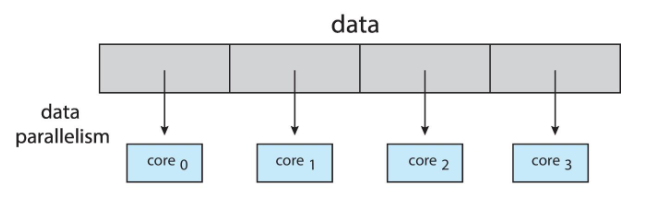

- identify components of process, representation, and scheduling

- describe creation & termination using appropriate syscalls

- describe and contrast inter-process communication w/ shared memory

- and message passing methods

-

Four fundamental OS concepts

- process

- instance of an executing program

- made of address space & more than 1 thread of control

- thread (continues in Ch. 4)

- single unique execution context

- full description of program stated

- captured by program counter, registers, execution flags, and stack

- for parallel operations..?

- address space (w/ address translation)

- programs: execute in address space

- != memory space of physical machine

- each program: starts w/ address 0

- programs: execute in address space

- dual mode operation (= Basic protection)

- user program / kernel: runs in different modes

- different permissions per programs

- OS & hardware: protected from user programs

- user programs: protected & isolated from one another

- process

Process concept

-

Process concept

- OS: executes a variety of programs: each runs as process

- process: captures a program execution, in a sequential manner

- von Neumann architecture

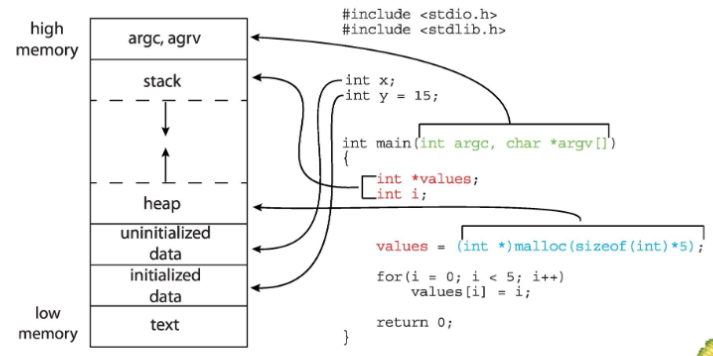

- process: contains multiple parts

- program code: aka text section

- current activities or state: program counter / processor registers

- stack: temporary data storage when invoking functions

- e.g. for function parameters, return addresses, etc.

- data section: containing global variables

- heap: w/ dynamically allocated memory during run time

- 👨🏫 stack & heap can grow dynamically: during execution

- program: passively stored on disk

- process: active entity, w/ PC specifying next instruction to fetch & execute

- process: with life cycle: from execution to termination

- program: becomes a process

- when executable file is loaded into main memory & get ready to run

- executes in address space, different from actual main memory location

- one program: can be executed by multiple processes

- a program: can be an execution environment for others (e.g. JVM)

-

Loading program into memory

- program: must be loaded to memory before execution

- load its code & static data into memory / address space

- some memory: allocated for program's runtime stack

- for C: stack used for local variables, function parameters, return addresses

- fill in: parameters for

main(): e.g.argc,argv

- OS: may allocate memory for program's heap

- programs: request & returns it explicitly

- dO: also d some initialization tasks

- esp. relating to IO

- e.g. UNIX: each process w/ 3 open file descriptors

- standard input / output / error

- esp. relating to IO

-

Memory layout of a program

- global data: divided into initialized data and uninitialized data

- separate section for

argc,argvparameters

COMP 3511: Lecture 6

Date: 2024-09-19 14:59:46

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Process Concept (cont.)

-

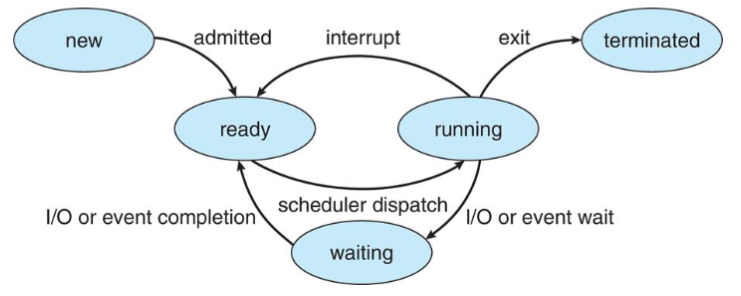

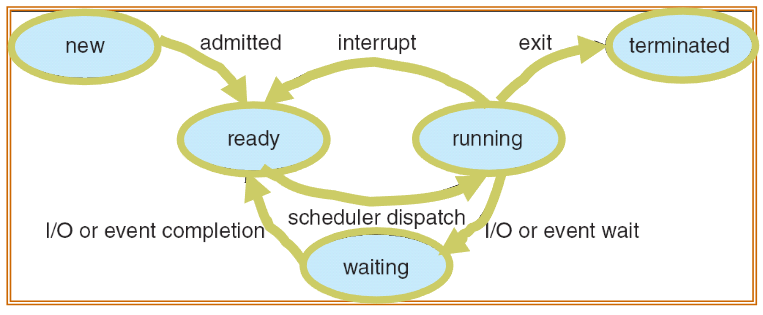

Process state

- traditional Unix: each process = 1 thread

- process state current activity of that process

- as process executes: it changes states

- new: process being created / allocating resource

- ready: process waiting to be assigned to CPU

- 👨🏫 remember ready queue and CPU core!

- running: instructions being fetched & executed

- waiting: process waiting for event to occur

- terminated: process finished execution / deallocating resource

- ❓ how do process manager work? how much CPU resource does it take?

- 👨🏫 will be discussed later (whole chapter)

- different things might happen in running state

exit: finished all tasksinterrupt: after each line of instruction: checks interrupt signal- if detected: add interrupt routine to process queue

I/O || event: I/O service, etc: are much slower than CPU- so the process gives up CPU itself, and waits for signal (from IO / etc.)

- traditional Unix: each process = 1 thread

-

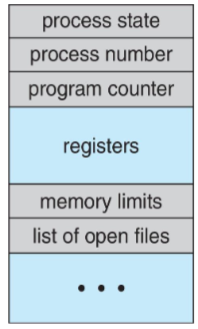

Process control block (PCB)

- each process: corresponds to 1 PCB

- i.e. info. associated w/ each process

- aka: task control block

- includes following info:

process state: running / waiting, etc.program counter: location of instruction to be fetched nextCPU registers: accumulators, index resisters, stack pointers, general-purpose registers, condition-code informationCPU scheduling info.: priorities, pointers to scheduling queue, scheduling parametersmemory-management info.: memory allocated to the process- complicated data structure: paging & segmentation tables

- 👨🏫 for now, consider it as a pointer to program data structure

- 👨🏫 program: simple binary; process: dynamic!

accounting info.: CPU used, clock time elapsed, time limits- simple statistics

IO status info.: IO devices allocated to process- e.g. list of open files

- each process: corresponds to 1 PCB

-

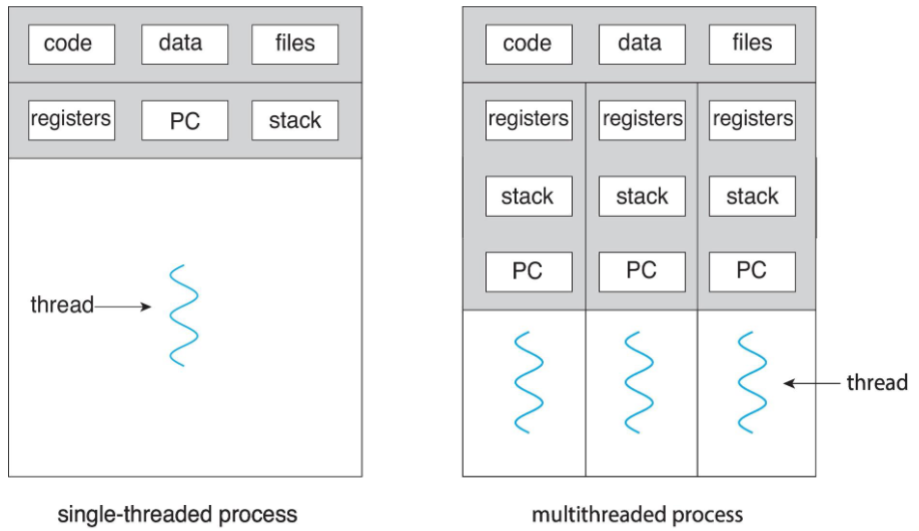

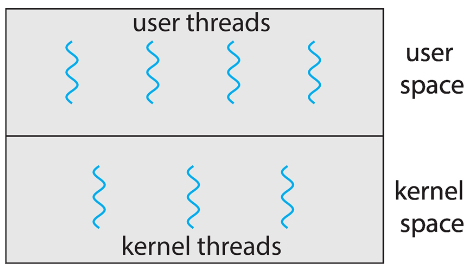

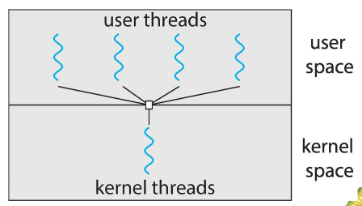

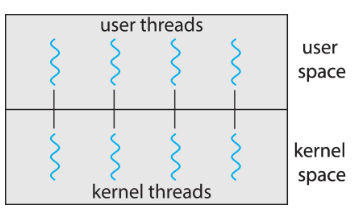

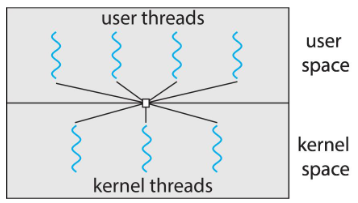

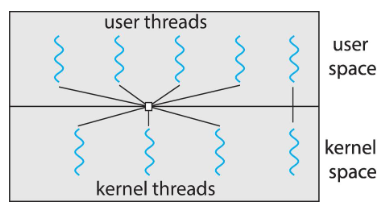

Threads

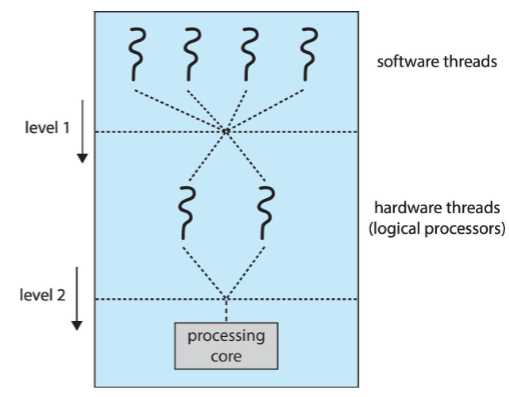

- we assumed: a process w/ single thread of execution

- thread: represented by: PC, registers, execution flags, stack

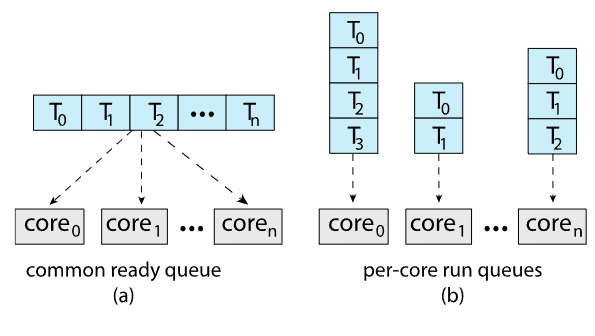

- modern OS: allow a process w/ multiple threads

- thus can perform parallel execution

- e.g. browsers: contacting web server vs. displaying image, etc.

- w/ multi-core systems: multiple threads of process: can run in parallel

- process: can consist of 1 / multiple threads

- running within the same address space (e.g. sharing code & data)

- each thread: w/ its own, unique state

- multi-threads: process providing concurrency & active components

- we assumed: a process w/ single thread of execution

-

Process representation in Linux

- Linux: storing list of processes in circular doubly LL

- aka task list

- each element in task list: of type struct

task_struct- i.e. PCB

pid t_pid; long state; unsigned int time_slice; struct task_struct *parent /* TBD */ struct list_head children /* TBD */ struct files_struct *files /* TBD */ struct task_struct *parent /* TBD */ - Linux: storing list of processes in circular doubly LL

Process Scheduling

-

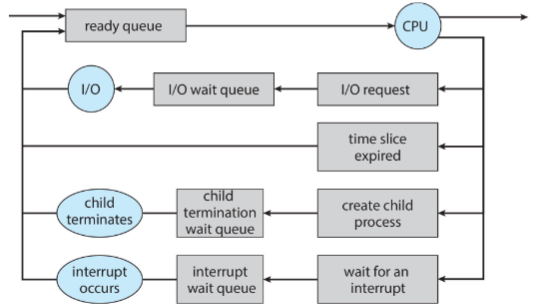

Process schedule

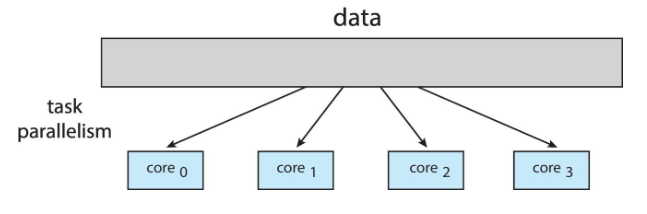

- primary object of multiprogramming: keep CPU busy

- as it's a scars resource

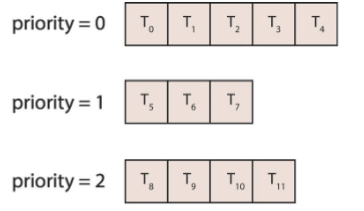

- process scheduler: OS mechanism selecting a process

- from available processes inside main memory

- scheduling criteria: CPU utilization, job response time, fairness, real-time guarantee, latency optimization...

- degree of multiprogramming: no. of processes currently residing in memory

- determines resource consumption

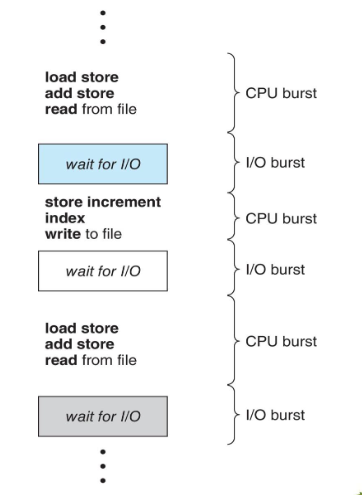

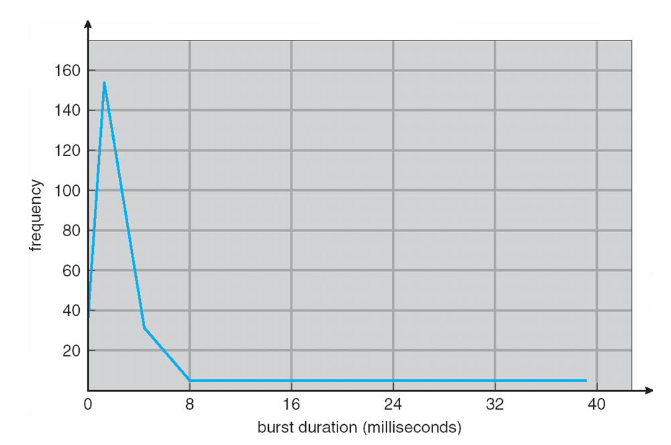

- processes: roughly either:

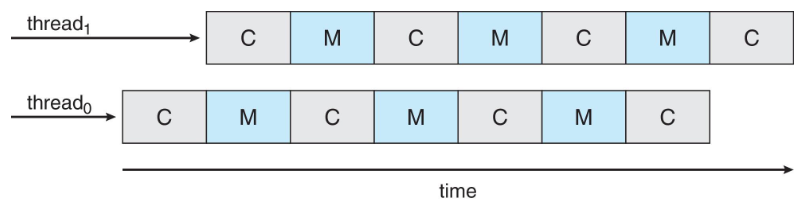

- I/O bound process: spends more time w/ IO than computations

- many short CPU bursts

- CPU bound process: generates IO requests infrequently

- more time doing computations; few, very long CPU bursts

- I/O bound process: spends more time w/ IO than computations

- primary object of multiprogramming: keep CPU busy

-

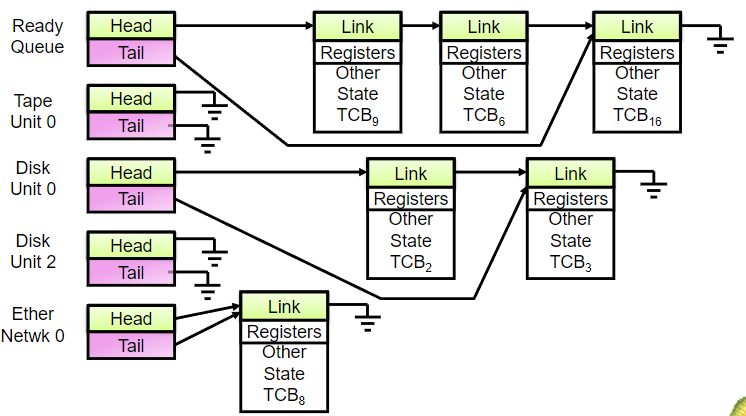

Scheduling queue

- OS: maintains different scheduling queues of processes

- ready queue: set of processes in main memory;

- ready & waiting to execute

- wait queue: set of processes waiting for an event

- ready queue: set of processes in main memory;

- processes: migrate among various queues during lifetime

- OS: maintains different scheduling queues of processes

-

Representation of process scheduling

-

Schedulers

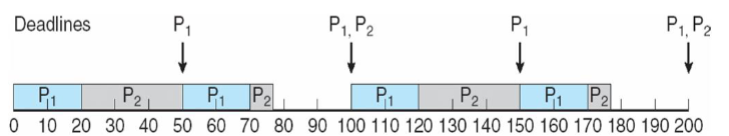

- long-term scheduler (job scheduler)

- for scientific & heavy computation

- selects which processes to be brought to memory

- for mainframe minicomputers (not PC)

- made of job queue: hosts job submitted to mainframe computers

- short-term scheduler (CPU scheduler)

- selects a process from a ready queue to be executed next & allocate CPU for it

- short-term scheduler: invoked frequently (μs, must be fast)

- long-term scheduler: invoked infrequently (s,min, may be slow)

- long term scheduler: dictates degree of multiprogramming

- mainframe computer system:

- long-term scheduler (job scheduler)

-

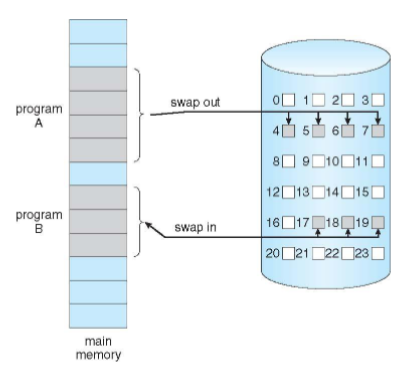

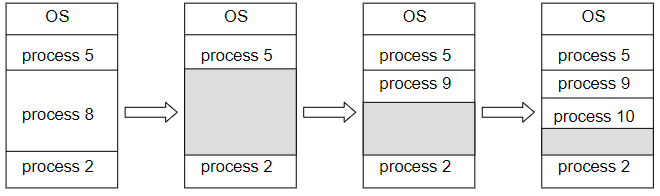

Medium term scheduling

- if you underestimated the program resource:

- swap out (not terminating) some programs

- entire process information (not just PCB)

- and use the freed up resource to accelerate & give more space

- swap out (not terminating) some programs

- for PC: you can simply kill the resources

- for mainstream: it's all services that's paid

- mostly not implemented for PC

- if you underestimated the program resource:

-

Inter-process CPU switch

- context switch: when CPU switches from one process to another

- sample OS task

- save state into PCB-0

- reload state from PCB-1

- save state into PCB-1

- reload from PCB-0

- ...

- sample OS task

- context: must be saved (in PCB / in each thread of process)

- typically: including registers, PC, stack pointer

- context-switch time: an overhead

- system: doesn't do useful work during it

- takes longer if OS & PCBs are complex

- switching speed: depends on

- memory speed

- no. of registers copied

- existence of a special instructions to load & store all registers

- speed: highly dependent on underlying hardware

- e.g. some processors: provide multiple sets of registers

- context switch: simply changing the pointer to a different register set

- context switch: when CPU switches from one process to another

Dual-mode Operation

-

Dual mode operation

- allows OS to protect itself & other system components

- 👨🏫 MS-DOS didn't have it; Windows have

- user mode: has less access / permission to hardware than the kernel mode

- can be easily extended to multiple modes!

- ❓ sudo = executing as the lowermost user?

- dual-mode operation provides: basic means of OS protection & users

- from errant / bad users

- all access: must be strictly controlled by well-defined OS API

- switching between modes: constant

- e.g. (user) API call to open -> (kernel) open -> (user) displays

-

Three types of mode transfer

- 3 common ways to access kernel mode from user mode

- system call:

- interrupt:

- trap / exception:

Operations on Processes

-

Operations on processes

- processes in most systems: execute concurrently;

- created & deleted dynamically; thus OS must support

- process creation: running a new program

- process termination: program execute completes / terminate

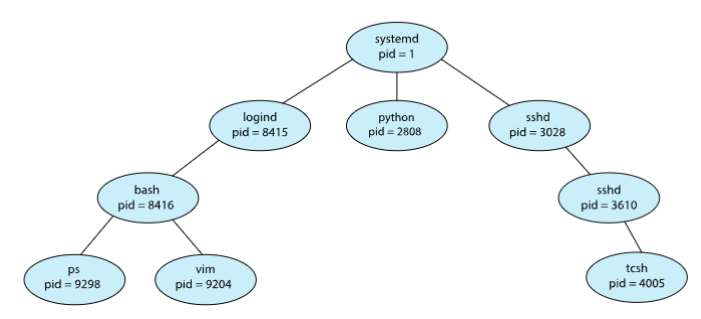

- parent process: can create children processes

- children: can create other child processes

- tree of processes!

- 👨🏫 shell: also a process!

- waiting for your input

- all commands running on it: children process of shell

desktop: can be a parent process- process started on it (e.g. by double click): its children process

- the grandpa:

rootprocess

-

Tree of processes on Linux system

-

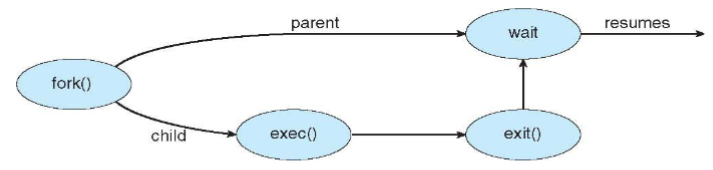

Process creation

- most OS: identify process according to unique process identifier

- or:

pid, usually an integer

- or:

- child process: needs certain resources

- CPU time, memory, files, IO devices to accomplish task

- parent process: also pass along initialization dat (input)

- to child process

- parent & children: share "almost" all resources

- three choices possible on how to share the resource

- parent & children: share almost all resources:

fork() - children: share only subset of parent's resources:

clone() - parent & children: share no resources

- parent & children: share almost all resources:

- two options for execution after process creation

- parent: continues to execute concurrently with its children

- parent: wait until some / all its children to terminate

- again, two choices of address space for a new child process

- child process: duplicates of parent (same program & data) - Unix/Linux

- child process: has a new program loaded into it (different program & data) - Windows

- UNIX examples

fork(): creates a new process; duplicates entire address space of the parent- both processes: continue execution at the next instruction after

fork()

- both processes: continue execution at the next instruction after

exec(): maybe used afterfork(): to replace child process's address space w/ a new program- loading a new program

- parent: can call

wait()to wait for a child to finish

- most OS: identify process according to unique process identifier

COMP 3511: Lecture 7

Date: 2024-09-24 14:35:41

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Operation on Processes (cont.)

-

Process creation

-

fork()

- parent & child: can be added to ready queue parallelly

- behavior: cannot be determined by code only

- parent & child: resumes execution after

forkw/ the same PC- i.e. the return of

fork()call

- i.e. the return of

- after

- return values of

fork()- each process: receives exactly one return value

-1: unsuccessful (to parent)0: successful (to child)>0: successful (to parent)- returned value: child's PID

- 👨🏫 losing track of PID / etc.: not discussed here

- 👨🏫 this value: used to distinguish post-fork behavior of process

- almost everything of the parent gets copied

- memory / file descriptors / etc.

- i.e. such copy of everything: very costly & time consuming

- parent can't access child's cloned memory

- nor the child access parent's original memory

- parent & child: can be added to ready queue parallelly

-

UNIX fork

- create & initialize process control block (PCB) in kernel

- create new address space / allocate memory

- initialize address space w/ the copy of entire contents

- time consuming!

- inherit the execution context of the parent (e.g. open files)

- i.e. all stack and etc. information

- inform the CPU scheduler: child process is ready to run

-

parent & child comparison

- after

fork()

Duplicated Different address space PID global-local var fork()returncurrent working dir running time root dir running state process resources resource limits program counter ... example

#include <stdlib.h> #include <stdio.h> #include <string.h> #include <unistd.h> #include <sys/types.h> #define BUFSIZE 1024 int main(int argc, char *argv[]) { char bug[BUFSIZE]; size_t readlen, writelen, slen; pid_t cpid, mypid; pit_t pid = getpid(); printf("Parent pid: %d\n", pid); cpid = fork(); // branch if (cpid > 0) { mypid = getpid(); // parent printf("[%d] parent of [%d]\n", mypid, cpid); } else if (cpid == 0) { mypid = getpid(); // child printf("[%d] child\n", mypid); } else { perror("Fork failed"); exit(1); } }- note that

- output after printing (i.e. within control flow) has undetermined order

- depending on CPU scheduler

- output after printing (i.e. within control flow) has undetermined order

- after

-

-

system calls

-

exec(), execlp(): syscall to change program in current process- creates a new process image from: regular executable file

- 👨🎓 ~=

jto another program?- ⭐ no return to original process!

-

wait(): syscall to wait for child process to finish- or: on general wait for event

- 👨🏫 enter waiting stage & give up CPU

- 👨🎓 if parent keep occupying CPU: then a single-core won't be able to be execute a fork w/

wait() - ⭐ very very important!

- 👨🎓 if parent keep occupying CPU: then a single-core won't be able to be execute a fork w/

-

exit(): syscall to terminate current process-

- free all resources

-

-

signal(): syscall to send notification to another process -

implementing a shell

char *prog, **args; int child_pid; while (readAndParseCmdLine(&prog, &args)) { child_pid = fork(); if (child_pid == 0) { exec(prog, args); // run command in child // cannot be reached } else { wait(child_pid); return 0; } } -

fork tracing

- to trace forks in loops, try to expand the loop

- e.g.

for (int i=0; i < 10; ++i) fork();intofork(); fork(); fork();...

- e.g.

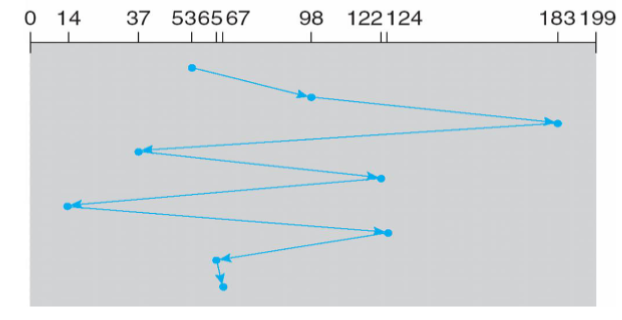

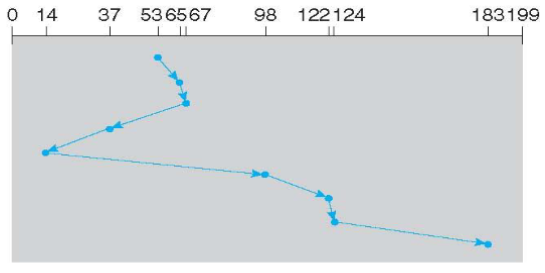

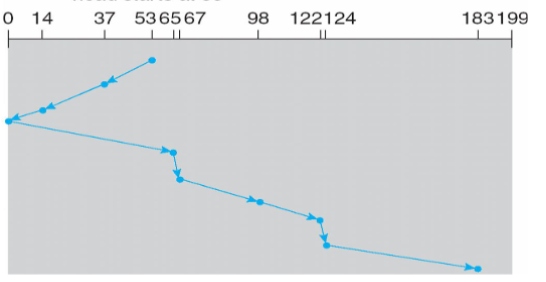

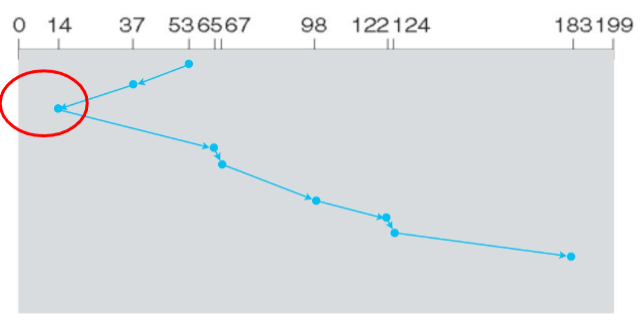

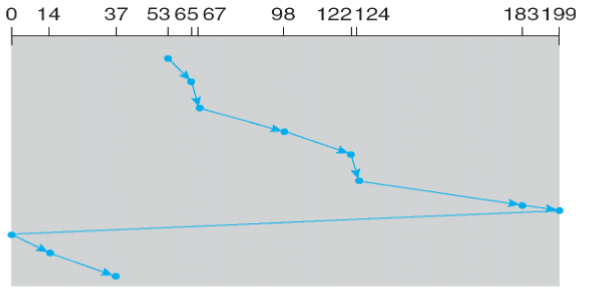

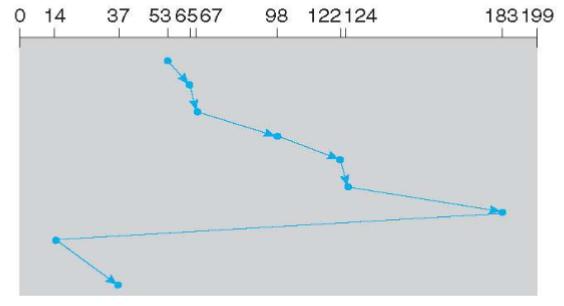

fork diagrams (credit: IA Peter)

- to trace forks in loops, try to expand the loop

-

---

title: fork()

---

flowchart LR

p0((p0)) --> f1{fork 1}

f1 --> p0_2((p0))

f1 --0--> p1((p1))

---

title: fork(); fork()

---

flowchart LR

p0((p0)) --> f1{fork 1}

f1 --> p0_2((p0))

f1 --0--> p1((p1))

p0_2 --> f2{fork 2}

f2 --> p0_3((p0))

f2 --0--> p2((p2))

p1 --> f3{fork 3}

f3 --> p1_2((p1))

f3 --0--> p3((p3))

---

title: fork()&&fork()

---

flowchart LR

p0((p0)) --> f1{fork 1}

f1 --> p0_2((p0))

f1 --0--> p1((p1))

p0_2 --> f2{fork 2}

f2 --> p0_3((p0))

f2 --0--> p2((p2))

---

title: fork()||fork()

---

flowchart LR

p0((p0)) --> f1{fork 1}

f1 --> p0_2((p0))

f1 --0--> p1((p1))

p1 --> f2{fork 2}

f2 --> p1_2((p1))

f2 --0--> p2((p2))

</details>

-

Final notes on fork

- process creation is unix: unique (no pun)

- most os: create a process in new address space & read in an executable file and execute

- Unix: separating it into

fork()andexec()

- linux:

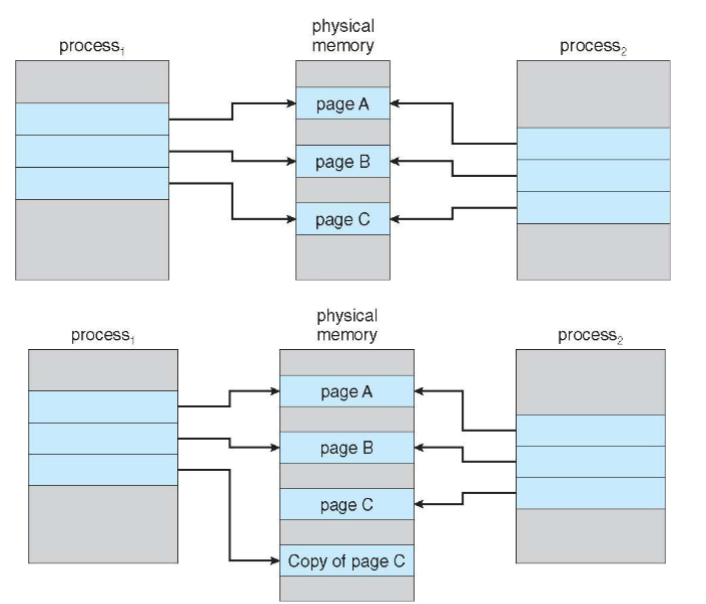

fork(): implemented viacopy-on-write- as usually: we don't need the entire copy

- thus we can delay / prevent copying the data

- until content is changes / written to

- improves speed a lot!

- thus we can delay / prevent copying the data

- child process: points to parent process's address space

- as usually: we don't need the entire copy

- linux also implements

fork()viaclone()(more general)clone(): uses a series of flags allow to specify which set of resources should be shared by parent & child

- process creation is unix: unique (no pun)

-

Process termination

- termination

- after process executes the last statement

exitsyscall: used to ask OS to delete it

- after process executes the last statement

- some os: do not allow child to exist if parent has terminated

- i.e. all children are to be terminated after parents

- cascading termination by the OS

- i.e. all children are to be terminated after parents

- process termination:

- deallocation: must involve the OS

- e.g. kernel data, etc.: cannot be accessed / modified by the user application

- concepts

- zombie process: process terminated, but

waitnot called on parents yet- e.g. corresponding entry in the process table / PID, and PCB

- 👨🏫 every process enters this stage, at least for a moment after termination

- nothing wrong. It's just "we are almost over"

- but zombies can be accumulated, and wit as a problem back then

- because the memory restriction was very tight ~=30 years ago

- once parent calls wait:

PIDof zombie process and other corresponding entry: released - such design: enables parent inform the OS termination of the child

- 👨🏫 not the best design, nor the only. but the design of Unix

- if parent terminates without invoking

wait()- child becomes an orphan

- without cascading, the process might be still runnable

- or become a zombie

- which, will never be released, as no parent exist

- thus: all process (except root or so): must have a parent

- (or kill them all using cascading)

- 👨🎓 can we assign

- 👨🏫 this is the design chosen by UNIX

- 👨🎓 can't we ensure the child to call OS and take care of themselves?

- maybe we can, but this is choice or trade-off made by the unix

- 👨🏫&👨🎓 parent has ability to track all its children, but it is not required.

- 👨🎓 can't we ensure the child to call OS and take care of themselves?

- zombie process: process terminated, but

- termination

COMP 3511: Lecture 8

Date: 2024-09-26 15:11:13

Reviewed:

Topic / Chapter:

summary

❓Questions

Notes

Communication

-

General

-

Communication models

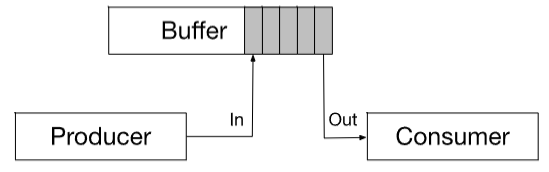

- producer-consumer problem

- represents common type of inter-process cooperation

- producer: produces information (& puts into buffer)

- consumer: consumes information (e.g. display)

- unbounded buffer: places no practical limit on the size of the buffer

- bounded buffer: assumes: fixed puffer size

- producer-consumer problem

-

Shared memory

-

shared data

- bounded buffer: pseudocode

#define BUFFER_SIZE 10 typedef struct { ... } item; item buffer[BUFFER_SIZE]; int in = 0; int out = 0; - allows at most

BUFFER_SIZE-1items in buffer at same time- "one" of the common communication method

- different implementations: can use all

BUFFER_SIZEitems

Pseudocode

s - bounded buffer: pseudocode

-

-

Message passing

- mechanism for communication & synchronization

- without sharing the same address

- particularly useful: in distributed environment

- IPC facility: provides

send (message)receive (message)

- message size: can be either fixed / variable

- in order to processes to communicate, they need to

- establish a communication link between them

- 👨🏫 need to know somehow each other's identity!

- such processes can eue either direct / indirect communication

- exchange messages visa primitives:

send(),receive()

- establish a communication link between them

- different methods exist

-

Direct communication

- processes: must name each other explicitly

- usually: PID, but can be acronyms, etc.

send(P,m)

- d

- processes: must name each other explicitly

-

Indirect Communication

- messages: sent to and received from mailboxes

- each mailbox: w/ unique id

- processes: can communicate only if they share a mailbox

- properties of communication link

- link: established written a pair of processes

- only if both members of pair: w/ shared mailbox

- d

- link: established written a pair of processes

- basic mechanism to support:

- create a new mailbox

- send & receive messages through the mailbox

- destroy the mailbox

- primitives: defined as

send(A,message): send message to mailbox:send(A,message): receive message from mailbox:

- mailbox sharing

- : shares mailbox

- can receive message from ?

-

solutions

- messages: sent to and received from mailboxes

-

Synchronization

- message passing: either blocking / non-blocking

- or: synchronous - asynchronous

- 4 possibilities

- status of sender & receiver

- blocking: considered synchronous

- blocking send: sending process: blocked until message is received

- i.e. I'll until you confirm

- blocking receive: receiver blocks until a message is available

- i.e. give me message! give me message! give me message!

- blocking send: sending process: blocked until message is received

- non-blocking: considered asynchronous

- non-blocking send: sending process: sends message & resumes operation

- not waiting for message reception

- non-blocking receive: receiver: retrieve either a valid message / null

- rendezvous: when

send()andreceive()are both blocking- i.e. common place

- pseudocode

- producer-consumer: becomes trivial

- message passing: either blocking / non-blocking

-

Buffering

- either direct / indirect, messages exchange by communicating processes reside in a temporary queue

- queue: either in three ways

- zero capacity: no messages are queued on a link

- sender: must wait for receiver

- zero capacity: no messages are queued on a link

- sender: must wait if link is full

- unbounded capacity: infinite length

- sender never waits

- zero capacity: no messages are queued on a link

-

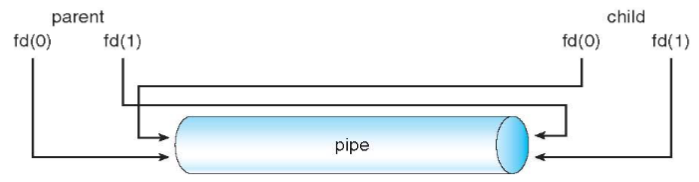

Pipes

- piped: one of the first IPC mechanism in early UNIX systems

- is communication: unidirectional / bidirectional?

- must there exists a relationship between processes?

- e.g. parent-child

- can the pipes:used over a network?

- or only on the same machine?

- ordinary pipes: cannot be accessed from outside the process that created it

- typically: parent process creates a pipe

- and uses it to communicate with child, made by

fork()

- names pipes: can be accessed without a parent-child relationship

- destroyed by the OS upon process termination

- piped: one of the first IPC mechanism in early UNIX systems

-

Ordinary pipes

- communicates in a standard producer-consumer fashion

pipe(int fd[])in unix: unidirectional- producer: writes to one end (write end,

fd[1]) - producer: reads from end (read end,

fd[0])

- producer: writes to one end (write end,

- requires parent-child relationship

- cannot be accessed from outside the process created them

- cease to exist after processes finish communication & terminated

- unix: treats pipe as a special type of file

- standard

read(),write(), and the child: inherits pipe from parent process - parent process: use

pipe()to create pipe- then use

forkto create a child process- child: inherits pipe as well

- 👨🏫 inherited fo one child only, in original UNIX

- then use

- standard

- example code

-

Names pipes

- more powerful than ordinary pipes!

- bidirectional

- no parent-child relationship required

- multiple process can use it (w/ several writers)

- names pipes: continues to exist after processes terminate

- must be explicitly deleted

- names

- more powerful than ordinary pipes!

-

Client-server communication

- sockets

- defined as: endpoint for communication

- pair of process communicating over a network:

- employs a pair of socket

- uniquely identified by an IP address and a port number

- port: specifies process / application on client / server side

- server: waits for incoming client requests

- by listening to a specified port (well-knowns)

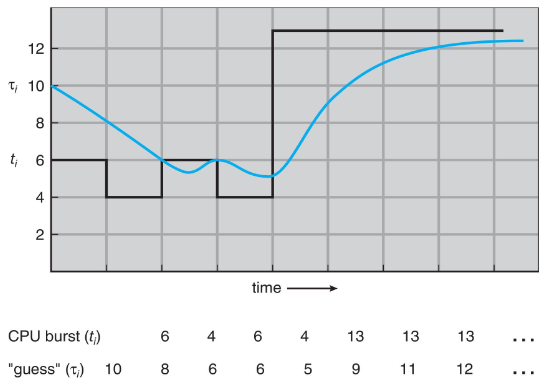

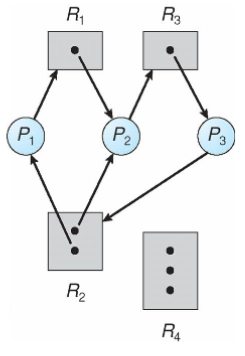

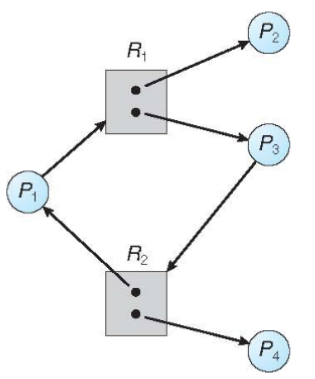

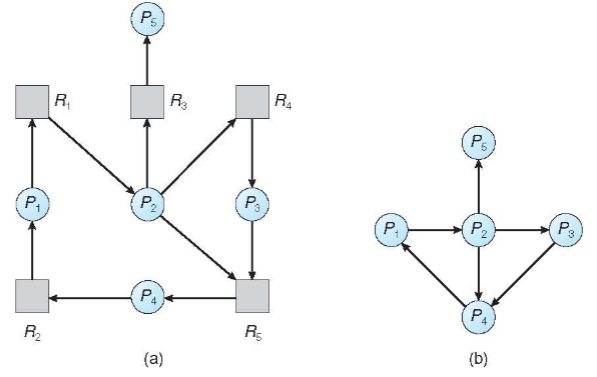

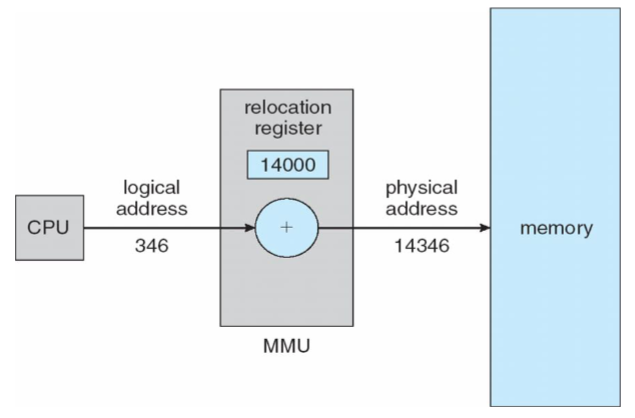

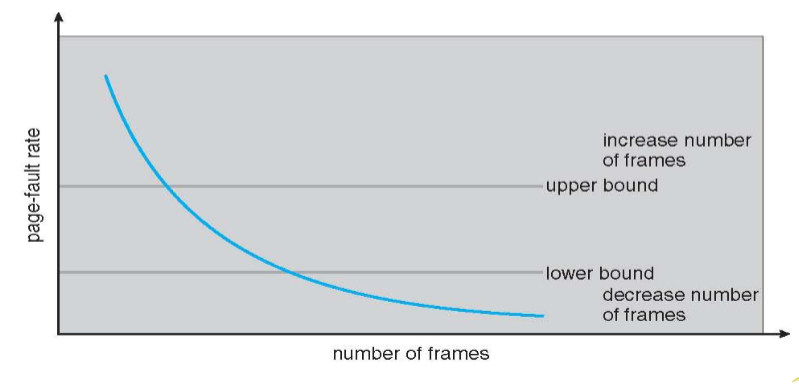

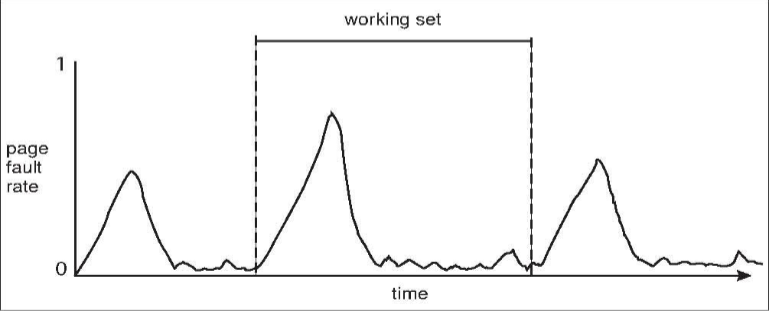

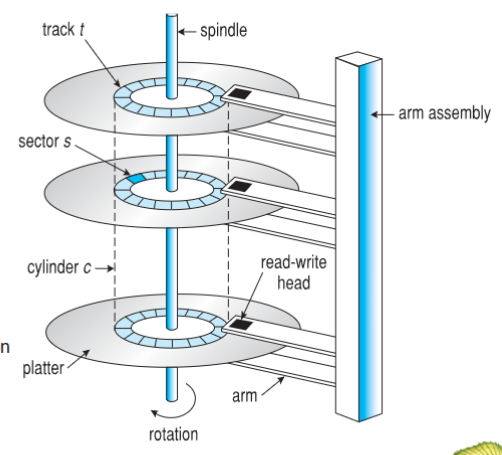

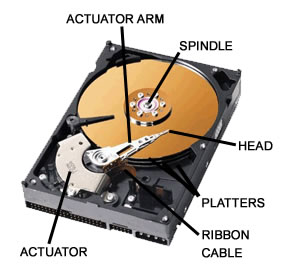

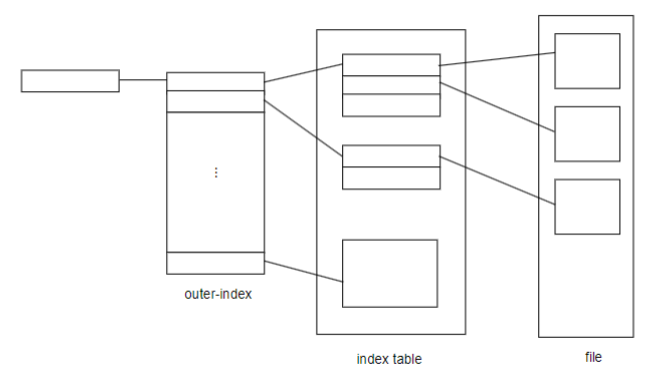

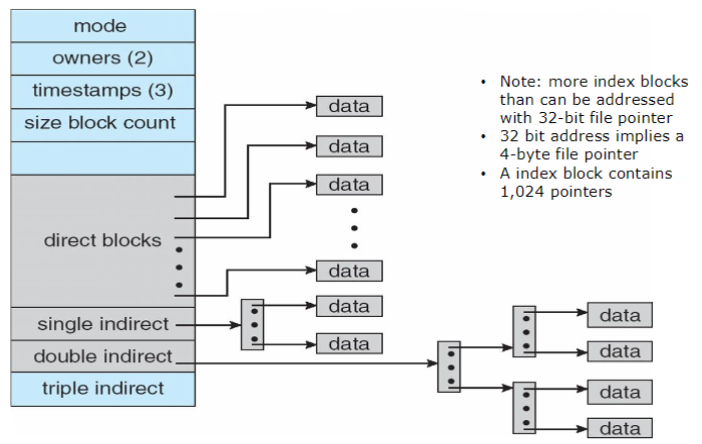

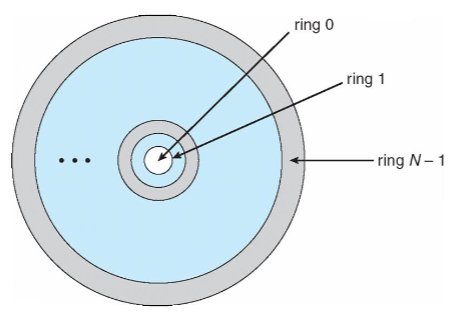

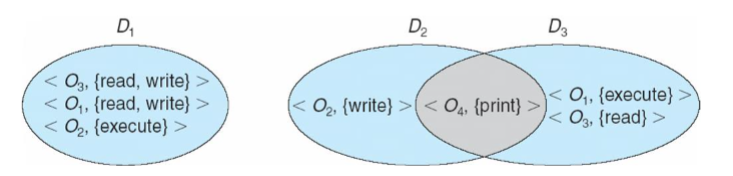

- once received: server can choose to accept the request